RAG Architecture Demystified: Building Smarter AI Applications

A practical guide to implementing production-ready Retrieval-Augmented Generation with LangChain

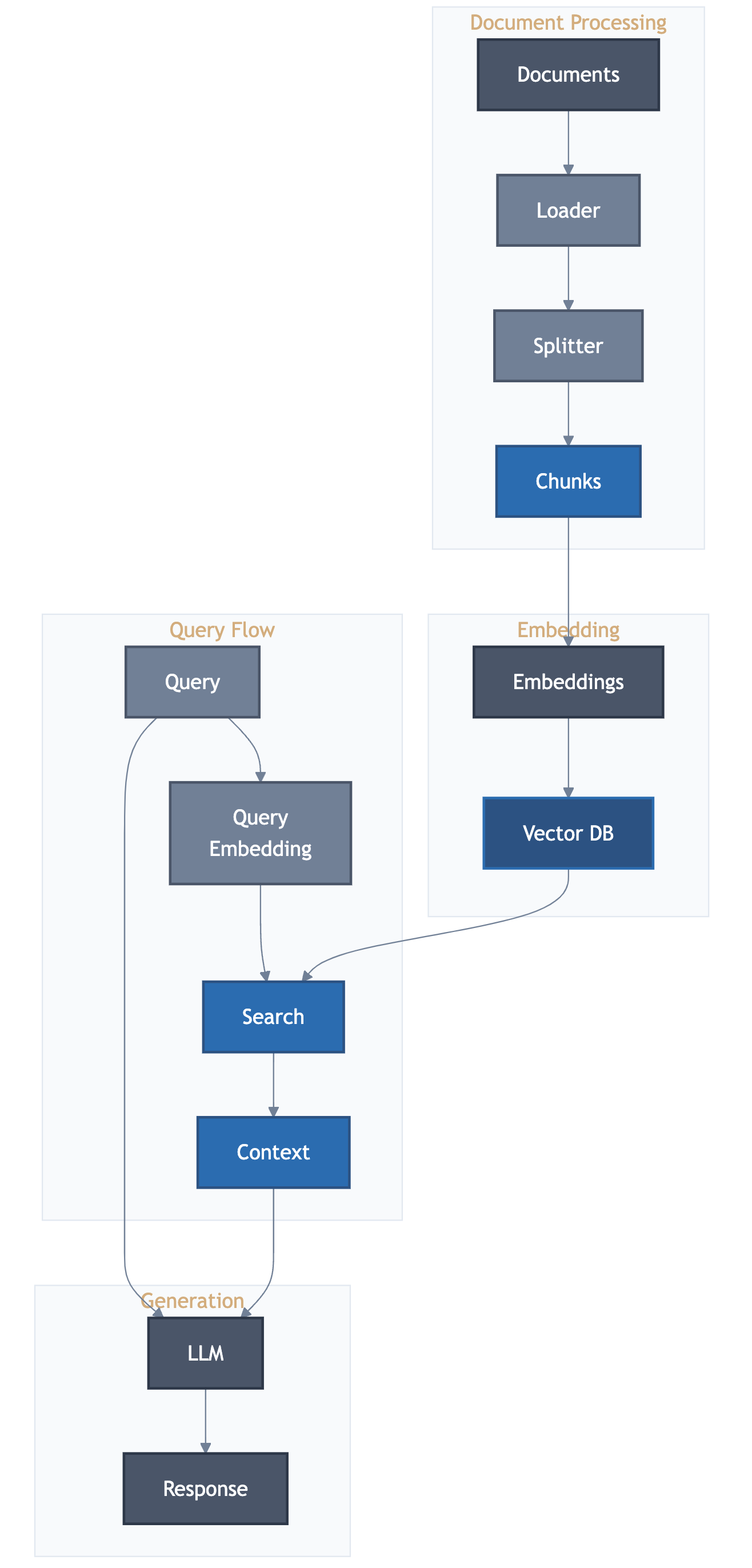

Ever wished your AI applications could leverage your organization's knowledge base to provide more accurate, contextual responses? That's exactly what Retrieval-Augmented Generation (RAG) offers. Instead of relying solely on an AI model's training data, RAG enables your applications to reference your specific documents, databases, and internal knowledge when generating responses. Think of it as giving your AI system access to your organization's collective memory.
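To make the idea concrete, here is a minimal, dependency-free sketch of the retrieve-then-generate loop. It simplifies things on purpose. A bag-of-words cosine similarity stands in for a real embedding model. The "generation" step only assembles the prompt you would send to an LLM. The function names `embed`, `retrieve`, and `build_prompt` are hypothetical and not part of any library.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy 'embedding': lowercase word counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, docs: list[str], k: int = 2) -> list[str]:
    """Retrieval: return the k documents most similar to the query."""
    q = embed(query)
    ranked = sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)
    return ranked[:k]


def build_prompt(query: str, context: list[str]) -> str:
    """Augmentation: prepend retrieved context to the question before calling an LLM."""
    joined = "\n".join(f"- {c}" for c in context)
    return (
        "Answer using only the context below.\n"
        f"Context:\n{joined}\n"
        f"Question: {query}"
    )


docs = [
    "The Pro plan includes single sign-on and audit logs.",
    "Our office is closed on public holidays.",
    "Audit logs are retained for 90 days on the Pro plan.",
]
question = "How long are audit logs retained?"
prompt = build_prompt(question, retrieve(question, docs))
print(prompt)
```

The retrieved chunk about 90-day retention ends up in the prompt, so the model can answer from your data rather than from its training set. A production system swaps the toy `embed` for a real embedding model and the in-memory list for a vector database.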

Why RAG Matters

Imagine asking a customer service AI about your company's latest product features. Without RAG, it might provide generic or outdated information based on its training data. RAG can pull information directly from your current product documentation, ensuring accurate and up-to-date responses. This capability transforms applications across various domains:

Customer Support: Accurate responses based on current documentation

Research & Development: Quick insights from internal research papers

Legal & Compliance: Precise information from latest policy documents

Knowledge Management: Efficient access to organizational expertise

Building Blocks: The Essential RAG Stack

After implementing several RAG systems, I've found a streamlined technology stack that delivers reliable results:

Framework and Storage Solutions Comparison

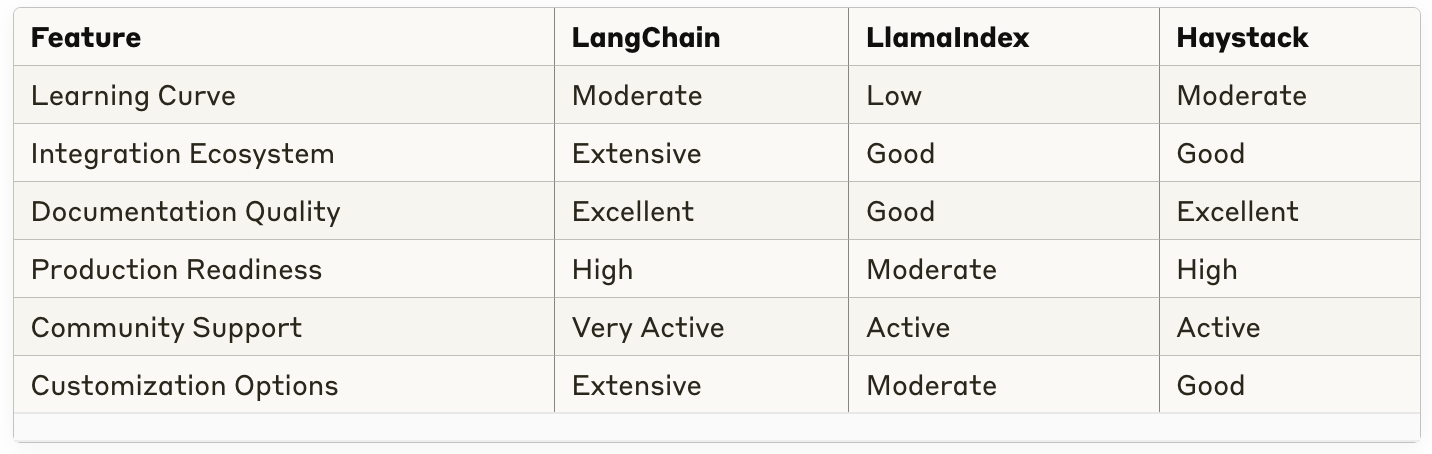

When building RAG applications, two critical choices are the orchestration framework and the vector storage solution. Here's our analysis of the top options:

RAG Framework Comparison

We chose LangChain for its extensive integration ecosystem and production-ready features.

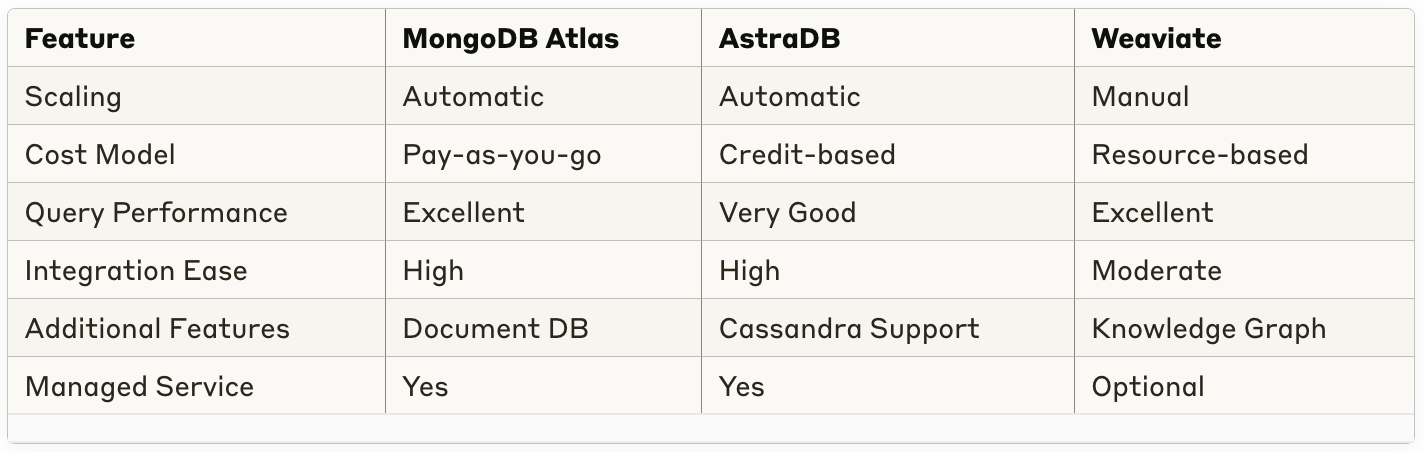

Vector Database Comparison

We selected MongoDB Atlas Vector Search for its:

Seamless LangChain integration

Familiar MongoDB query syntax

Strong performance at scale

Cost-effective operation
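The "familiar query syntax" point is easiest to see in a raw query. Atlas Vector Search is exposed as a `$vectorSearch` aggregation stage, so a similarity search is just an ordinary aggregation pipeline. The sketch below only builds that pipeline as Python dicts, using a placeholder query vector. It assumes the embeddings live in a field named `embedding` and the chunk text in `text` (LangChain's defaults), and that the index is named `default` as in the example that follows. The helper name `build_vector_search_pipeline` is hypothetical.

```python
def build_vector_search_pipeline(
    query_vector, index="default", path="embedding", k=4, num_candidates=100
):
    """Build an aggregation pipeline for an Atlas Vector Search similarity query."""
    if num_candidates < k:
        raise ValueError("num_candidates must be at least k")
    return [
        {
            "$vectorSearch": {
                "index": index,                     # Atlas Vector Search index name
                "path": path,                       # field holding stored embeddings
                "queryVector": list(query_vector),  # embedding of the user's question
                "numCandidates": num_candidates,    # candidate pool for approximate search
                "limit": k,                         # number of results to return
            }
        },
        # Keep the chunk text and similarity score; drop the large vector field.
        {"$project": {"_id": 0, "text": 1, "score": {"$meta": "vectorSearchScore"}}},
    ]


pipeline = build_vector_search_pipeline([0.1, 0.2, 0.3], k=3)
# With pymongo this would run as: collection.aggregate(pipeline)
```

The index itself is defined separately in Atlas. It must declare a vector field at `embedding` whose dimension count matches your embedding model (1536 for OpenAI's `text-embedding-ada-002` and `text-embedding-3-small`).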

Practical Implementation

Here's a high-level look at how these components work together:

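```python
from pymongo import MongoClient
from langchain_community.document_loaders import DirectoryLoader
from langchain_mongodb import MongoDBAtlasVectorSearch
from langchain_openai import OpenAIEmbeddings
from langchain_text_splitters import RecursiveCharacterTextSplitter

# Connect to your Atlas cluster (the connection string, database,
# and collection names below are placeholders)
client = MongoClient("<your-atlas-connection-string>")
mongodb_collection = client["rag_db"]["documents"]

# 1. Load and process documents
# (DirectoryLoader's default loader parses files via the `unstructured` package)
loader = DirectoryLoader("path/to/documents", glob="**/*.pdf")
documents = loader.load()

# 2. Split text into overlapping chunks
text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=1000,    # maximum characters per chunk
    chunk_overlap=200,  # up to 200 characters shared by adjacent chunks
)
splits = text_splitter.split_documents(documents)

# 3. Configure vector storage; index_name must match an Atlas Vector
# Search index defined on this collection
vector_store = MongoDBAtlasVectorSearch(
    collection=mongodb_collection,
    embedding=OpenAIEmbeddings(),  # reads OPENAI_API_KEY from the environment
    index_name="default",
)

# 4. Embed the chunks and store them in MongoDB
vector_store.add_documents(splits)
```

Best Practices and Optimization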

From our production experience, here are key considerations for building effective RAG systems:

Performance and Cost Optimization

Implement efficient document chunking strategies

Use batch processing for large document sets

Cache frequently accessed embeddings

Monitor and optimize embedding generation costs
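The caching and batching advice can be sketched in a few lines. This is a minimal illustration under stated assumptions. `embed_batch` is a hypothetical stand-in for whatever embedding call you use (for example, `OpenAIEmbeddings().embed_documents`). The cache is an in-memory dict keyed by a SHA-256 hash of the chunk text. Only cache misses are sent to the provider, in batches of `batch_size`, and the counters give a simple hook for cost monitoring.

```python
import hashlib
from typing import Callable


class CachedEmbedder:
    """Wraps a batch embedding function with a content-hash cache and fixed-size batching."""

    def __init__(self, embed_batch: Callable[[list[str]], list[list[float]]], batch_size: int = 64):
        self.embed_batch = embed_batch  # hypothetical: calls your embedding provider
        self.batch_size = batch_size
        self.cache: dict[str, list[float]] = {}
        self.api_calls = 0       # batched provider calls made (a cost proxy)
        self.texts_embedded = 0  # texts actually sent to the provider

    @staticmethod
    def _key(text: str) -> str:
        return hashlib.sha256(text.encode("utf-8")).hexdigest()

    def embed(self, texts: list[str]) -> list[list[float]]:
        # Collect uncached texts, de-duplicated while preserving order.
        missing: dict[str, str] = {}
        for t in texts:
            k = self._key(t)
            if k not in self.cache and k not in missing:
                missing[k] = t
        pending = list(missing.items())
        # Send cache misses to the provider in fixed-size batches.
        for i in range(0, len(pending), self.batch_size):
            batch = pending[i : i + self.batch_size]
            vectors = self.embed_batch([t for _, t in batch])
            self.api_calls += 1
            self.texts_embedded += len(batch)
            for (k, _), v in zip(batch, vectors):
                self.cache[k] = v
        return [self.cache[self._key(t)] for t in texts]


def fake_embed_batch(batch: list[str]) -> list[list[float]]:
    """Stand-in provider: a one-dimensional 'vector' holding the text length."""
    return [[float(len(t))] for t in batch]


embedder = CachedEmbedder(fake_embed_batch, batch_size=2)
embedder.embed(["a", "bb", "ccc", "a"])  # 3 unique texts, sent as 2 batched calls
embedder.embed(["bb", "dddd"])           # only "dddd" is new, so 1 more call
```

In production you would likely back the cache with a persistent store (Redis, or a field on the MongoDB document itself) so that re-ingesting unchanged documents costs nothing.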

Quality and Maintenance

Regularly update your knowledge base

Implement automated testing for retrieval accuracy

Monitor system performance and response quality

Maintain precise version control across components
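For automated retrieval testing, one common starting point is a small labeled set of questions, each paired with the chunk ID that should come back, scored with hit rate and mean reciprocal rank (MRR) at k. The sketch below assumes a hypothetical `retrieve_ids(question, k)` function that wraps your retriever and returns chunk IDs in rank order. The metric choice is ours for illustration, not something LangChain or MongoDB prescribes.

```python
from typing import Callable


def evaluate_retrieval(
    retrieve_ids: Callable[[str, int], list[str]],
    labeled: list[tuple[str, str]],
    k: int = 4,
) -> dict[str, float]:
    """Compute hit rate@k and MRR@k over (question, expected_chunk_id) pairs."""
    if not labeled:
        raise ValueError("labeled set must not be empty")
    hits = 0
    reciprocal_rank_sum = 0.0
    for question, expected_id in labeled:
        results = retrieve_ids(question, k)[:k]
        if expected_id in results:
            hits += 1
            reciprocal_rank_sum += 1.0 / (results.index(expected_id) + 1)
    n = len(labeled)
    return {"hit_rate": hits / n, "mrr": reciprocal_rank_sum / n}


# Stub retriever for illustration; in practice this would call your vector
# store and map the returned documents to chunk IDs.
def stub_retrieve_ids(question: str, k: int) -> list[str]:
    canned = {
        "refund policy": ["doc-7", "doc-2"],
        "sso setup": ["doc-1", "doc-9"],
    }
    return canned.get(question, [])[:k]


labeled_set = [("refund policy", "doc-2"), ("sso setup", "doc-1"), ("pricing", "doc-5")]
scores = evaluate_retrieval(stub_retrieve_ids, labeled_set, k=2)
```

Running this in CI and failing the build when either score drops below a threshold you choose turns "retrieval accuracy" into a regression test, which also catches silent changes from new embedding models or chunking settings.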

Additional Resources

To dive deeper into RAG implementation:

Conclusion

RAG technology represents a significant advancement in making AI applications more accurate and contextually aware. While implementation requires careful consideration of tools and strategies, the benefits make it a worthwhile investment: improved accuracy, reduced hallucinations, and better use of organizational knowledge.

The key to success lies in starting simple, focusing on your specific use case, and gradually optimizing based on real-world usage patterns. Have you implemented RAG in your organization? I'd love to hear about your experiences in the comments below.