Context Is King: How GPT-5 and GPT-OSS Help Turn Prompts into Prescriptions

Stop asking questions. Start writing protocols that execute.

Forget bigger models: context engineering is what makes AI actually useful. GPT-5 brings a 400K-token context window and parallel tool calling. GPT-OSS brings open weights you can run on your own hardware. Together, they go beyond answering questions: they execute workflows, call APIs, and turn prompts into prescriptions. Context engineering is the new pipette.

From Prompts to Pathways

Every time you feed a model a carefully structured prompt, you're doing context engineering: stacking prior knowledge, constraints, and goals into a coherent narrative.

As I wrote in my context engineering deep dive:

"Context enters an LLM in several ways, including prompts (e.g., user instructions), retrieval (e.g., documents), and tool calls (e.g., APIs). Just like RAM, the LLM context window has limited 'communication bandwidth' to handle these various sources of context."

The trick is treating the prompt as a dynamic protocol—not a static question. You're not asking "what is X?" You're saying "given these constraints, tools, and prior knowledge, execute this workflow."

The old way: Write a prompt, get text back. The new way: Write a protocol, get actions back.
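To make "write a protocol" concrete, here is a minimal sketch of a protocol as a data structure that renders into a prompt. The `Protocol` class, its field names, and the section headings are my own illustration, not part of any GPT-5 or GPT-OSS API.

```python
from dataclasses import dataclass, field


@dataclass
class Protocol:
    """Hypothetical container for the pieces of a dynamic protocol."""
    goal: str
    constraints: list = field(default_factory=list)
    tools: list = field(default_factory=list)
    prior_knowledge: list = field(default_factory=list)
    steps: list = field(default_factory=list)

    def render(self) -> str:
        """Turn the protocol into plain prompt text, skipping empty sections."""
        sections = ["GOAL\n" + self.goal]
        labelled = [
            ("CONSTRAINTS", self.constraints),
            ("AVAILABLE TOOLS", self.tools),
            ("PRIOR KNOWLEDGE", self.prior_knowledge),
        ]
        for title, items in labelled:
            if items:
                bullets = "\n".join(f"- {item}" for item in items)
                sections.append(title + "\n" + bullets)
        if self.steps:
            numbered = "\n".join(
                f"{n}. {step}" for n, step in enumerate(self.steps, 1)
            )
            sections.append("WORKFLOW\n" + numbered)
        return "\n\n".join(sections)


protocol = Protocol(
    goal="Rank candidate compounds by predicted binding affinity",
    constraints=["Use only the supplied assay data"],
    tools=["lookup_compound(name)"],
    steps=["Load the assay table", "Score each compound", "Report the top 5"],
)
prompt_text = protocol.render()
```

The same rendered text can be sent to either model, which is the point: the protocol is the asset and the model is swappable.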

GPT-5 and GPT-OSS both understand this shift. They don't just generate text—they orchestrate tools, manage state, and execute multi-step workflows. The difference is where they run and how much context they can handle.
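Because the context window is a fixed budget, it helps to decide up front which sources get in. The sketch below is one simple way to do that. It assumes a rough heuristic of about 4 characters per token (a real tokenizer would be more accurate), and the `ContextSource` and `pack_context` names are hypothetical.

```python
from dataclasses import dataclass

# Assumption: roughly 4 characters per token for English text.
CHARS_PER_TOKEN = 4


@dataclass
class ContextSource:
    name: str      # e.g. "prompt", "retrieval", "tool_results"
    text: str
    priority: int  # lower number = more important


def estimate_tokens(text: str) -> int:
    """Very rough token estimate based on character count."""
    if not text:
        return 0
    return max(1, len(text) // CHARS_PER_TOKEN)


def pack_context(sources, budget_tokens):
    """Greedily keep the highest-priority sources that fit in the budget."""
    kept, used = [], 0
    for src in sorted(sources, key=lambda s: s.priority):
        cost = estimate_tokens(src.text)
        if used + cost <= budget_tokens:
            kept.append(src.name)
            used += cost
    return kept, used


sources = [
    ContextSource("prompt", "x" * 400, priority=0),        # ~100 tokens
    ContextSource("retrieval", "y" * 4000, priority=1),    # ~1000 tokens
    ContextSource("tool_results", "z" * 800, priority=2),  # ~200 tokens
]
kept, used = pack_context(sources, budget_tokens=400)
```

With a 400-token budget, the retrieved documents are too large and get dropped while the prompt and tool results stay. A larger window changes the outcome, not the logic.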

GPT-5's Four Game-Changers

OpenAI's GPT-5 brings four capabilities that transform how we work:

1. 400K-token context: In the API that is roughly 272K tokens of input plus 128K of output, enough to fit large codebases, stacks of research papers, or patent libraries in one shot.

2. Native tool calling: GPT-5 scores 96.7% on the τ²-bench telecom tool-use benchmark. No more brittle regex hacks. Windsurf reports it has "half the tool calling error rate" of other models. It chains dozens of calls, in sequence and in parallel, without losing track.

3. RLHF on domain-specific data: Fewer disclaimers, more actionable insights. OpenAI reports that with thinking enabled, GPT-5's responses are about 80% less likely to contain a factual error than o3's.

4. Free-form function calling: Simon Willison shows how it sends raw Python, SQL, or shell commands directly to tools. No JSON overhead.
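Why does skipping JSON matter? The sketch below compares the same snippet sent as a JSON argument and as raw text. It does not call any API, and the two helper functions are hypothetical. It only illustrates the escaping that a JSON wrapper forces on code-like payloads.

```python
import json

# A small script the model might want a tool to run.
code = 'print("hello")\nprint("world")'


def as_json_arguments(source: str) -> str:
    """Classic style: the code travels as a string inside a JSON object."""
    return json.dumps({"code": source})


def as_free_form(source: str) -> str:
    """Free-form style: the tool receives the text exactly as written."""
    return source


json_payload = as_json_arguments(code)
raw_payload = as_free_form(code)

# The JSON version must escape every quote and newline; the raw one does not.
extra_chars = len(json_payload) - len(raw_payload)
```

Every quote and newline in the JSON version is a chance for the model to emit a malformed string. The raw version has nothing to escape.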

This isn't incremental. Latent Space puts it best: "GPT-5 doesn't just use tools. It thinks with them."
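On your side of the wire, parallel tool calling means the model hands back several requests at once and your code runs them concurrently. Here is a minimal sketch using `asyncio`. The two tools are stand-ins, and the `id` / `name` / `arguments` shape of each call is an assumption for illustration rather than the exact format any API returns.

```python
import asyncio


async def search_papers(query: str) -> str:
    await asyncio.sleep(0.01)  # stand-in for network latency
    return f"papers about {query}"


async def lookup_compound(name: str) -> str:
    await asyncio.sleep(0.01)
    return f"properties of {name}"


# Hypothetical registry mapping tool names to coroutines.
TOOLS = {"search_papers": search_papers, "lookup_compound": lookup_compound}


async def run_tool_calls(calls):
    """Run every requested call concurrently; results keep request order."""

    async def run_one(call):
        tool = TOOLS.get(call["name"])
        if tool is None:
            return {"id": call["id"], "error": f"unknown tool {call['name']}"}
        try:
            output = await tool(**call["arguments"])
            return {"id": call["id"], "output": output}
        except Exception as exc:  # report the failure instead of crashing the batch
            return {"id": call["id"], "error": str(exc)}

    return await asyncio.gather(*(run_one(c) for c in calls))


def dispatch(calls):
    """Synchronous entry point for scripts that are not already async."""
    return asyncio.run(run_tool_calls(calls))


results = dispatch([
    {"id": "a", "name": "search_papers", "arguments": {"query": "kinase"}},
    {"id": "b", "name": "lookup_compound", "arguments": {"name": "aspirin"}},
])
```

Returning errors as data rather than raising lets the model see which call failed and decide what to do next.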

Local Alchemy: Running Your Own Lab

You don't need a data center to start. With GPT-OSS and a modest GPU, you can spin up a local LLM that speaks your domain language, calls external tools (BLAST, ChemDraw, AlphaFold), and keeps your IP on-premises.

GPT-OSS is the hackable alternative—120B or 20B parameters, Apache 2.0 licensed, runs on your hardware:

Fast and private: Your data never leaves your machine

Hackable: Open weights mean you can fine-tune for specific domains

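Compatible: OpenAI describes its post-training as similar to the process used for o4-mini, so well-structured prompts usually carry over between the two with minor adjustments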

Affordable: One-time hardware cost vs. ongoing API fees

Right now the 120B model comes close to o4-mini on core reasoning benchmarks, and the 20B lands nearer o3-mini. Not GPT-5 level, but good enough for many focused tasks. Think of it as your local lab assistant: not as smart as the professor (GPT-5), but always available and completely under your control.

Hugging Face hosts both GPT-OSS sizes. The 120B needs a single 80GB GPU. The 20B runs in 16GB of memory on consumer hardware. With Ollama or LM Studio, setup is close to a one-liner: pull the model and start chatting. The manual route takes a little more: a conda environment, a model file, and some Python glue.
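The "Python glue" can be quite small. This sketch talks to a local Ollama server over its HTTP chat endpoint using only the standard library. It assumes Ollama is running on its default port and that the model was pulled under the tag `gpt-oss:20b`; check `ollama list` for the tag on your machine. The helper names are mine.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/chat"  # Ollama's default local endpoint
MODEL = "gpt-oss:20b"  # assumed tag; confirm with `ollama list`


def build_chat_request(system: str, user: str, model: str = MODEL) -> dict:
    """Build the JSON body for a single non-streaming chat turn."""
    return {
        "model": model,
        "stream": False,
        "messages": [
            {"role": "system", "content": system},
            {"role": "user", "content": user},
        ],
    }


def ask_local(system: str, user: str, url: str = OLLAMA_URL, timeout: int = 120) -> str:
    """Send the request to the local server and return the reply text."""
    body = json.dumps(build_chat_request(system, user)).encode("utf-8")
    request = urllib.request.Request(
        url, data=body, headers={"Content-Type": "application/json"}
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read())["message"]["content"]


# Example (requires a running Ollama server):
# print(ask_local("You are a lab assistant.", "Summarize what BLAST does."))
```

Nothing in this path leaves your machine, which is the whole appeal for sensitive data.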

How They Work Together

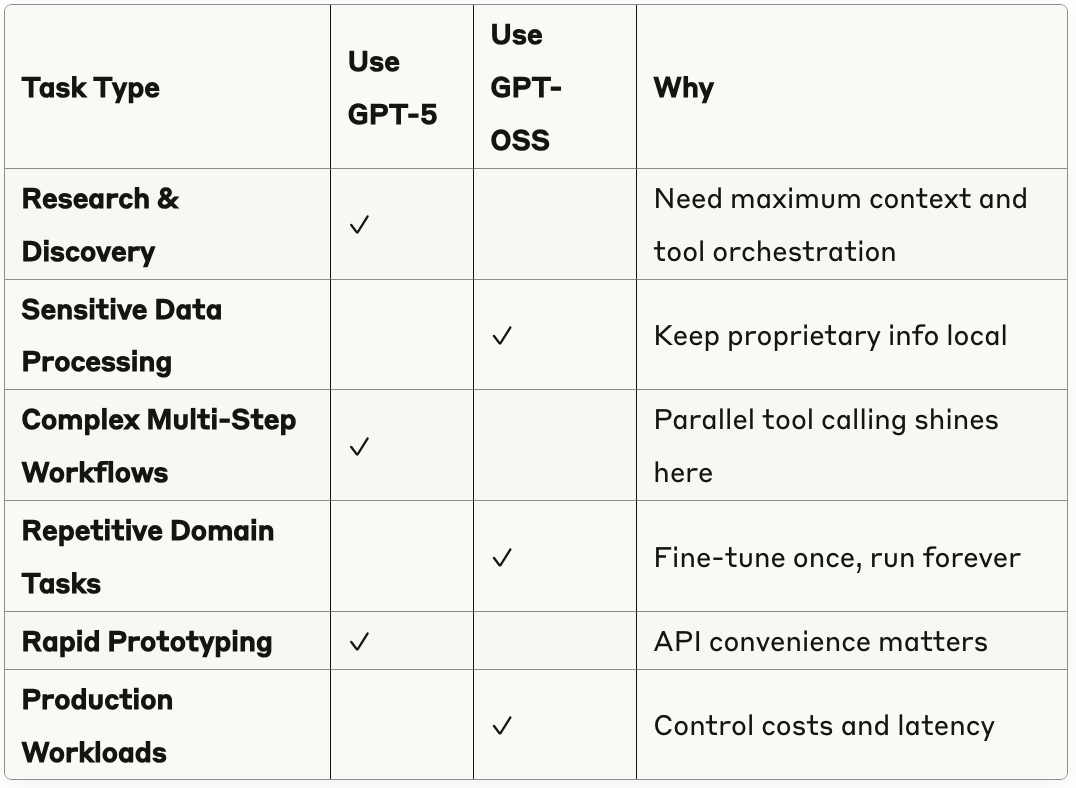

Here's the real insight: You don't choose between them. You use both.

Example: A biotech startup uses GPT-5 to explore drug interactions across 50 research papers (massive context needed), then deploys GPT-OSS locally to run daily compound screening (sensitive IP, repetitive task).

Context engineering is the new pipette—master it, and you can switch between models like switching between different tools in the lab.
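Switching between models can be an explicit rule rather than a gut call. The sketch below routes a task by sensitivity and size. The `Task` fields, the returned labels, and the 32K local limit are assumptions for illustration; set the local limit to whatever your hardware and configuration actually support.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    estimated_tokens: int
    sensitive: bool  # True if the data must stay on-premises


def choose_model(task: Task, local_limit: int = 32_000, hosted_limit: int = 400_000) -> str:
    """Pick a target for a task; sensitive data never leaves the local model."""
    if task.sensitive:
        if task.estimated_tokens <= local_limit:
            return "gpt-oss"
        return "gpt-oss-chunked"  # too big for one pass: split it, keep it local
    if task.estimated_tokens <= local_limit:
        return "gpt-oss"          # small enough that the API call is unnecessary
    if task.estimated_tokens <= hosted_limit:
        return "gpt-5"
    return "gpt-5-chunked"


literature_review = Task("50-paper interaction review", 250_000, sensitive=False)
daily_screening = Task("compound screening", 8_000, sensitive=True)

review_target = choose_model(literature_review)
screening_target = choose_model(daily_screening)
```

For the biotech example, the literature review goes to GPT-5 and the daily screening stays on GPT-OSS.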

Real Templates That Work on Both

These PromptPilot templates showcase how dynamic protocols work in practice:

Template 1: Scientific Analysis Framework

Transforms the model into a Chief Strategy Officer evaluating presentations. It identifies weaknesses, blind spots, and strategic gaps. The structured analysis forces rigorous thinking.

Use GPT-5 when: Analyzing complex multi-stakeholder proposals with lots of context. Use GPT-OSS when: Running routine reviews on internal documents.

Template 2: Mental Model Synthesis

Applies 20 mental models simultaneously—first principles, inversion, network effects. Analyzes problems from multiple perspectives, finding patterns and contradictions.

Use GPT-5 when: You need parallel processing of complex interconnected systems. Use GPT-OSS when: Running established analytical patterns on focused problems.

Template 3: Expert Panel Orchestration

Creates panels of domain experts who maintain distinct perspectives while building on each other's insights. Perfect for interdisciplinary problems.

Use GPT-5 when: Simulating real-time expert debate with tool access. Use GPT-OSS when: Running pre-defined expert protocols locally.
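To give a feel for how a template like the expert panel is assembled, here is a minimal sketch that builds the prompt from a roster of experts. It is my own illustration and not the actual PromptPilot template. The function name, rule wording, and parameters are all hypothetical.

```python
def build_panel_prompt(question: str, experts: dict, rounds: int = 2) -> str:
    """Build a panel-discussion prompt from a mapping of expert -> perspective."""
    if not experts:
        raise ValueError("at least one expert is required")
    roster = "\n".join(f"- {name}: {view}" for name, view in experts.items())
    rules = "\n".join([
        f"1. Run {rounds} rounds of discussion.",
        "2. Each expert speaks once per round and stays in their own perspective.",
        "3. Each expert must respond to at least one point made by another expert.",
        "4. Finish with points of agreement, open disagreements, and next steps.",
    ])
    return (
        f"QUESTION\n{question}\n\n"
        f"PANEL\n{roster}\n\n"
        f"RULES\n{rules}"
    )


panel_prompt = build_panel_prompt(
    "Should we advance compound X to animal studies?",
    {
        "Medicinal chemist": "synthesis feasibility and stability",
        "Toxicologist": "safety signals and dosing risk",
        "Clinician": "unmet patient need and trial design",
    },
    rounds=3,
)
```

Keeping the roster as data means the same template runs a three-person panel locally or a ten-person panel on a larger context window.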

What Each Model Does Best

Based on benchmarks and developer experiences:

GPT-5 excels at:

Orchestrating 10+ tools in parallel

Maintaining context across 100+ page documents

Real-world coding tasks, scoring 74.9% on SWE-bench Verified

Real-time API integration and web searching

Handling ambiguous, open-ended research questions

GPT-OSS excels at:

Running air-gapped on sensitive data

Domain-specific tasks after fine-tuning

Predictable latency for production systems

Cost-effective batch processing

Customizable for specialized workflows

The key: both were post-trained with reinforcement learning along similar lines, so a well-structured protocol usually transfers between them with little rework. Your dynamic protocols work on both.

Start Today: Three Ways to Begin

1. For Researchers

Use GPT-5 to synthesize literature across your field. Feed it 20 papers and ask for contradictions, gaps, and unexplored connections. Then use GPT-OSS locally to run daily literature monitoring on your specific niche.

2. For Engineers

Prototype with GPT-5's parallel tool calling to integrate your entire stack. Once you identify the critical path, deploy GPT-OSS to handle routine automation without API costs.

3. For Data Scientists

Let GPT-5 explore your dataset with its 400K-token context: every column and every correlation that fits. Then fine-tune GPT-OSS on your specific analysis patterns for rapid iteration.

The unlock isn't choosing the right model. It's learning to engineer context that turns prompts into actions.

Why This Changes Everything

We're moving from Q&A to execution. Models don't just answer—they do.

With proper context engineering:

A prompt becomes a protocol

A question becomes a workflow

A description becomes a prescription

GPT-5 gives you the power to explore any problem space. GPT-OSS gives you the control to deploy solutions. Together, they cover the full spectrum from research to production.

This calls for a different way of thinking than we've needed before. Not "what should I ask?" but "what should I have it do?"

Bottom line: Context engineering is the new pipette. Master it locally with GPT-OSS today, and when you need the big guns, GPT-5 is there. You'll swap between models like tools in a lab—not rebuild your entire workflow.

Resources to Go Deeper

Tools to Try:

PromptPilot - Template management

Ollama - Run GPT-OSS locally

LM Studio - GUI for local models

Azure AI Foundry - Enterprise orchestration

The biggest unlock? Start using these in your daily workflows today and see what fits. It is a different way of working, so embrace it.