OpenAI's GPT-OSS

The Open-Source Gambit That's Reshaping the AI Landscape

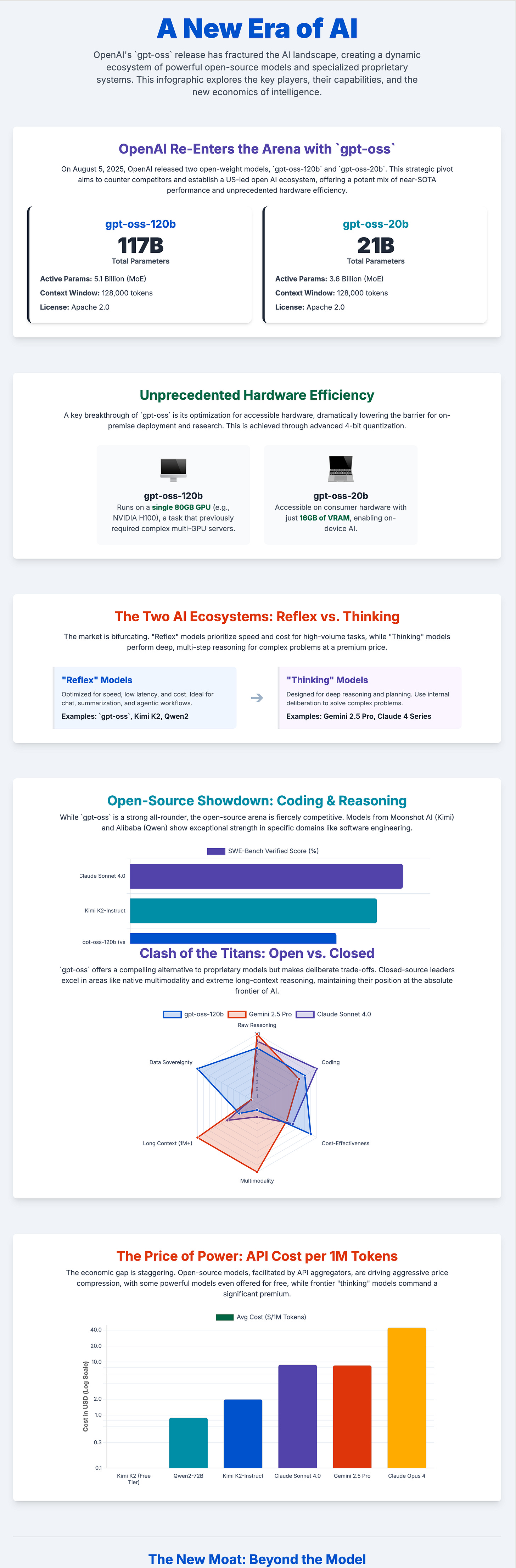

On August 5, 2025, OpenAI did something unexpected.

After years of keeping their most powerful models behind API paywalls, they released two state-of-the-art open-weight language models under the permissive Apache 2.0 license: gpt-oss-120b and gpt-oss-20b. They are the company's first open-weight language models since GPT-2 in 2019.

This isn't just another model release. It's a strategic repositioning with technical, economic, and political implications that will affect how we build and deploy AI systems for years to come.

The Technical Marvel: What Makes GPT-OSS Special

Architecture That Defies Expectations

The gpt-oss models showcase what's possible when you optimize for efficiency rather than raw scale. Using a Mixture-of-Experts (MoE) design, the flagship gpt-oss-120b contains 117 billion total parameters but activates only 5.1 billion for each token it processes. In every MoE layer, a router sends the token through just 4 of 128 specialized "expert" networks.
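Here is a minimal sketch of top-k expert selection in plain Python that shows the routing idea. The expert functions and router scores are toy stand-ins. In a real MoE layer, the scores come from a learned projection of the token's hidden state. Applying a softmax over only the selected scores is one common variant; it is assumed here, not taken from OpenAI's code.

```python
import math
import random

# Toy stand-ins: gpt-oss-120b is described as having 128 experts with
# 4 active per token; here each "expert" is just a Python function.
NUM_EXPERTS = 128
TOP_K = 4


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route(router_logits, k=TOP_K):
    """Select the k highest-scoring experts; softmax over just those scores."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)
    top = ranked[:k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))


def moe_layer(x, router_logits, experts, k=TOP_K):
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    return sum(w * experts[i](x) for i, w in route(router_logits, k))


if __name__ == "__main__":
    random.seed(0)
    experts = [lambda x, s=s: s * x for s in range(NUM_EXPERTS)]
    logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
    print(route(logits))  # four (expert_index, weight) pairs
    print(moe_layer(1.0, logits, experts))
```

Only the four selected experts execute for a given token, which is where the compute savings come from. The other 124 still have to be stored.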

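Sparse activation keeps per-token compute low, but every expert still has to sit in memory. The 120-billion-parameter model fits on a single 80GB GPU because its MoE weights ship quantized to MXFP4, at roughly 4.25 bits per parameter. At 16-bit precision, the same 117 billion weights would need about 234GB, which means several GPUs.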
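A few lines of Python are enough to check the memory arithmetic. This sketch counts weights only, with no KV cache or activations. As a simplifying assumption, it applies MXFP4's roughly 4.25 bits to every parameter, although in the released models only the MoE weights are quantized this way. The 21-billion total for gpt-oss-20b is an approximate count assumed for illustration.

```python
def weight_memory_gb(num_params, bits_per_param):
    """Approximate storage for the weights alone, in decimal gigabytes (1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Simplification: every weight is treated as MXFP4, although only the
# MoE weights are quantized in the released models. The 20b count is approximate.
MODELS = {"gpt-oss-120b": 117e9, "gpt-oss-20b": 21e9}
MXFP4_BITS = 4.0 + 8.0 / 32  # 4-bit values plus one 8-bit scale per 32-value block

if __name__ == "__main__":
    for name, params in MODELS.items():
        bf16 = weight_memory_gb(params, 16)
        mxfp4 = weight_memory_gb(params, MXFP4_BITS)
        print(f"{name}: {bf16:.1f} GB at 16-bit, {mxfp4:.1f} GB at ~4.25-bit")
```

The 120b model comes out to 234.0 GB at 16-bit and about 62.2 GB at MXFP4. That leaves headroom on an 80GB card for the KV cache and activations this sketch ignores.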

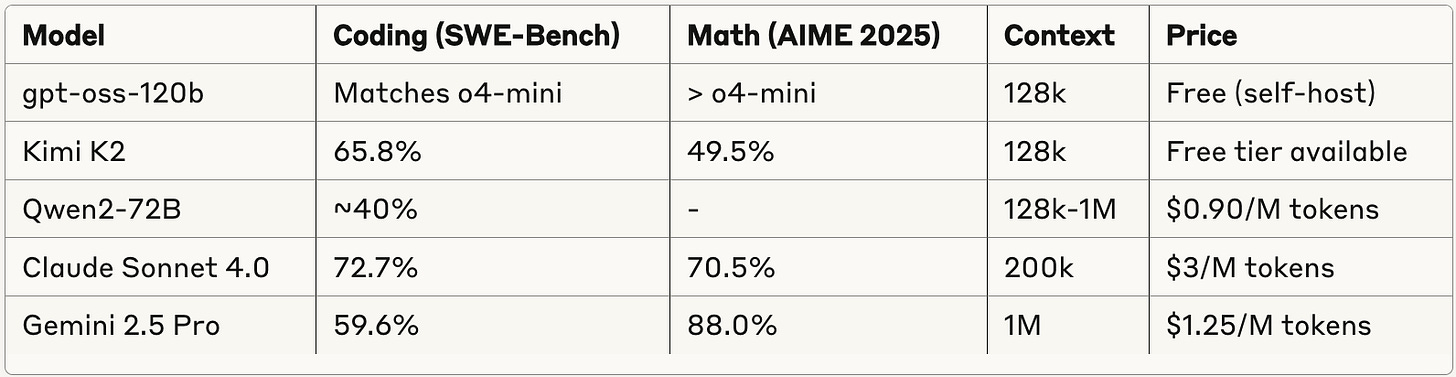

Key Specifications:

Context Window: 128,000 tokens (enough to process entire books)

Memory Requirements: 80GB for the 120b model, just 16GB for the 20b

Architecture: Alternating dense and locally banded sparse attention layers

Licensing: Apache 2.0 (truly open, commercially usable)
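Boolean attention masks can illustrate the alternating pattern. In this sketch, a dense layer lets each token attend to every earlier token, while a banded layer limits it to a fixed window of recent tokens. The window of 3 and the choice of which layers are dense are arbitrary, picked for readability. The released models reportedly use a 128-token band.

```python
def causal_mask(seq_len, window=None):
    """mask[q][k] is True when query position q may attend to key position k.

    window=None gives dense causal attention (every earlier token); an integer
    gives a banded causal mask where q sees only the most recent `window` keys.
    """
    return [
        [k <= q and (window is None or q - k < window) for k in range(seq_len)]
        for q in range(seq_len)
    ]


def alternating_masks(num_layers, seq_len, window):
    """Even-indexed layers dense, odd-indexed layers banded (an arbitrary choice here)."""
    return [causal_mask(seq_len, None if i % 2 == 0 else window) for i in range(num_layers)]


def attended_pairs(mask):
    """Count allowed (query, key) pairs, a rough proxy for attention cost."""
    return sum(sum(row) for row in mask)


if __name__ == "__main__":
    dense, banded = alternating_masks(2, seq_len=8, window=3)
    print(sum(dense[7]), sum(banded[7]))                   # 8 3
    print(attended_pairs(dense), attended_pairs(banded))   # 36 21
```

Dense layers grow quadratically with sequence length. Banded layers grow only with sequence length times window size, so interleaving them keeps long contexts affordable.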

Performance That Challenges the Giants

OpenAI made an interesting choice here: they released models that compete directly with their own commercial offerings.

The gpt-oss-120b achieves near-parity with OpenAI's o4-mini on core reasoning benchmarks. It outperforms o3-mini outright, and on several demanding evaluations it matches or beats o4-mini itself:

Mathematics: Higher scores than o4-mini on the AIME 2024 and 2025 competitions

Coding: Matches or exceeds o4-mini on Codeforces competition problems

Healthcare: Outperforms o4-mini on HealthBench queries

The smaller gpt-oss-20b, compact enough to run on a high-end laptop, still matches or beats o3-mini across these same benchmarks.

The main limitation? These models are text-only and primarily English-focused. No images, no audio, no video. Pure language understanding and generation.

The Safety Paradox

OpenAI took an unusual approach to safety. While the models underwent extensive training to refuse harmful requests, they deliberately left the Chain-of-Thought reasoning unsupervised.

This transparency lets researchers monitor the model's "thinking" for bias or concerning patterns, but it also means developers need to be careful. Raw reasoning outputs may contain hallucinations or ignore system instructions, so they shouldn't be shown directly to end users.
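Filtering the model's output by channel is one way to keep raw reasoning away from end users. gpt-oss is trained on OpenAI's "harmony" chat format, which puts reasoning on an `analysis` channel and the user-facing answer on a `final` channel. The sketch below assumes a simplified version of that token layout and parses it with a regular expression. A production system should rely on a proper harmony parser rather than this approximation.

```python
import re

# Assumed, simplified harmony-style layout:
#   <|start|>assistant<|channel|>NAME<|message|>TEXT<|end|>   (or <|return|> / <|call|>)
MESSAGE_RE = re.compile(
    r"<\|channel\|>(?P<channel>\w+).*?<\|message\|>(?P<text>.*?)<\|(?:end|return|call)\|>",
    re.DOTALL,
)


def split_channels(completion):
    """Return (channel, text) pairs in the order they appear in the completion."""
    return [(m.group("channel"), m.group("text")) for m in MESSAGE_RE.finditer(completion)]


def user_visible_text(completion):
    """Keep only 'final' channel text; drop raw 'analysis' reasoning."""
    return "".join(text for channel, text in split_channels(completion) if channel == "final")


if __name__ == "__main__":
    raw = (
        "<|start|>assistant<|channel|>analysis<|message|>User wants 2+2. Easy.<|end|>"
        "<|start|>assistant<|channel|>final<|message|>2 + 2 = 4.<|return|>"
    )
    print(user_visible_text(raw))  # 2 + 2 = 4.
```

The analysis text stays available for logging and monitoring. Only the final channel reaches the user interface.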

The $500,000 Red Teaming Challenge turns this potential weakness into a strength by incentivizing the global research community to find and report vulnerabilities.

The Open-Source Arena: David Takes on Goliath

Kimi K2: The Trillion-Parameter Titan

Moonshot AI's Kimi K2 represents one extreme of open-source AI: massive scale. With 1 trillion total parameters (32 billion active per token), it's an engineering achievement in its own right.

Kimi K2's advantages:

Coding prowess: 65.8% on SWE-Bench Verified

Creative writing: Particularly good at narrative and dialogue

Price disruption: A free, rate-limited variant is available through OpenRouter

The downside? You'll need serious hardware to run it locally.

Qwen2: The Multilingual Swiss Army Knife

Alibaba's Qwen2 family takes a platform approach. From tiny 0.5B models to the 72B flagship, plus specialized variants in the follow-up Qwen2.5 generation, the family covers most use cases:

Language coverage: Support for roughly 30 languages

Extreme contexts: Qwen2.5-1M handles 1 million token windows

Vision capabilities: Qwen2.5-VL understands images

Accessibility: The 7B model punches well above its weight class

GLM-4: The Agentic Specialist

Zhipu AI's GLM-4 focuses on autonomous capabilities. The GLM-4 All Tools variant doesn't just follow instructions; it decides when and how to use web browsers, Python interpreters, and other tools to complete complex tasks.

With strong bilingual (Chinese/English) support and practical problem-solving abilities, GLM-4 represents sophisticated open-source agent development.

The Competitive Reality

The performance gap between open and closed models has become surprisingly narrow.

The Economics Revolution: When Premium Becomes Commodity

The pricing trends reveal an interesting story. GPT-4.5, before its deprecation, cost $150 per million output tokens. Today, Kimi K2, a model with comparable capabilities in many areas, is available for free.

This isn't sustainable pricing. It's strategic pricing designed to capture developer mindshare and establish ecosystems.

API aggregators like OpenRouter and Together AI have accelerated this trend. By providing unified access to dozens of models, they've turned AI capabilities into interchangeable utilities. Developers can now build "mixture of agents" systems that route tasks to the most cost-effective model for each specific need.
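At its simplest, a cost-aware router is a lookup. It keeps a catalog of models with prices and capability tags, then picks the cheapest model that covers a task's requirements. The catalog below is entirely hypothetical, including the model names and tags. Only the $150-per-million-token price echoes the GPT-4.5 figure quoted earlier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelOption:
    name: str
    usd_per_million_output: float  # price per 1M output tokens
    capabilities: frozenset


# Hypothetical catalog: names, prices, and capability tags are illustrative only.
CATALOG = [
    ModelOption("premium-closed", 150.0, frozenset({"chat", "code", "math", "long-context"})),
    ModelOption("open-moe-large", 0.60, frozenset({"chat", "code", "math"})),
    ModelOption("open-small", 0.10, frozenset({"chat"})),
]


def output_cost_usd(model, output_tokens):
    """Dollar cost of generating `output_tokens` with `model`."""
    return model.usd_per_million_output * output_tokens / 1_000_000


def cheapest_capable(required, catalog=CATALOG):
    """Return the lowest-priced model whose capabilities cover every required tag."""
    candidates = [m for m in catalog if required <= m.capabilities]
    if not candidates:
        raise ValueError(f"no model supports {sorted(required)}")
    return min(candidates, key=lambda m: m.usd_per_million_output)


if __name__ == "__main__":
    print(cheapest_capable(frozenset({"code"})).name)   # open-moe-large
    print(output_cost_usd(CATALOG[0], 5_000_000))        # 750.0
```

At the premium rate, 5 million output tokens cost $750. Aggregators make it easy to send that traffic to a cheaper model instead whenever a cheaper model can handle the task.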

The result? Raw model intelligence is becoming less of a competitive differentiator.

Strategic Implications: The Great Unbundling

OpenAI's Calculated Gambit

This release isn't about generosity. It's about influence.

OpenAI explicitly frames gpt-oss as building "US-led rails" for global AI infrastructure, a direct response to the growing influence of Chinese open-source models. By providing a powerful, truly open alternative, they're attempting to establish Western technical standards and safety norms as the global default.

The strategy makes sense: use open-source to capture the developer ecosystem, then monetize through premium "thinking" models that remain proprietary.

The Bifurcation of Intelligence

We're seeing the emergence of two distinct model categories:

"Reflex" Models (including gpt-oss): Fast, efficient, cheap to run. Well suited to high-volume tasks. gpt-oss does generate chain-of-thought, but its low per-token cost places it in this tier.

"Thinking" Models (Gemini 2.5 Pro, Claude 4): Deep, deliberative, expensive. Essential for complex reasoning.

This split mirrors a common deployment pattern. A fast, cheap model handles the bulk of traffic, and only the hardest requests escalate to an expensive reasoning model.
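A cascade is one common way to combine the two tiers: the reflex model answers first, and only low-confidence requests escalate to the thinking model. Both models below are hypothetical stubs. The confidence score that drives escalation is also an assumption; in practice it might come from a verifier, token log-probabilities, or a self-rating.

```python
from typing import Callable, Tuple

# A "model" here is any callable returning (answer, confidence in [0, 1]).
# Where the confidence comes from is an assumption left open by this sketch.
Model = Callable[[str], Tuple[str, float]]


def cascade(prompt: str, reflex: Model, thinking: Model, threshold: float = 0.8) -> Tuple[str, str]:
    """Answer with the cheap model when it is confident; otherwise escalate."""
    answer, confidence = reflex(prompt)
    if confidence >= threshold:
        return answer, "reflex"
    answer, _ = thinking(prompt)
    return answer, "thinking"


def stub_reflex(prompt: str) -> Tuple[str, float]:
    # Hypothetical stand-in: confident on short prompts, unsure on long ones.
    return "fast answer", (0.95 if len(prompt) < 40 else 0.30)


def stub_thinking(prompt: str) -> Tuple[str, float]:
    # Hypothetical stand-in for an expensive reasoning model.
    return "deliberate answer", 0.99


if __name__ == "__main__":
    print(cascade("Translate 'hello' into French.", stub_reflex, stub_thinking))
    print(cascade("Prove that there are infinitely many primes of the form 4k + 3.",
                  stub_reflex, stub_thinking))
```

The threshold is the main knob. Raising it sends more traffic to the expensive tier in exchange for higher quality.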

Where Competitive Advantage Lives Now

With model weights becoming increasingly accessible, the new differentiators are:

Proprietary data and real-time information access

Deep ecosystem integration (Microsoft 365, Google Workspace)

Trust, reliability, and user experience

Specialized fine-tuning and domain expertise

Societal Implications: Pandora's Box Opens

The Democratization Dividend

Any developer with a capable GPU, or even a high-end laptop for the 20b model, now has access to GPT-4-level intelligence.

A hospital in rural Kenya can deploy a medical AI assistant without sending patient data overseas. A startup in Eastern Europe can build sophisticated AI products without burning through API costs. Educational institutions can provide personalized tutoring without the privacy concerns of cloud services.

These aren't hypothetical scenarios. They're happening right now.

The Safety Question

OpenAI's approach (releasing powerful models with safety measures but leaving ultimate responsibility to users) represents a bet that transparency and community vigilance can work effectively.

The $500,000 Red Teaming Challenge transforms safety from a corporate concern into a collective responsibility. Whether this approach proves effective will influence how future models are released.

Looking Forward: The New Landscape

The dynamics have fundamentally shifted. We're entering an era where:

Domain-specific fine-tuned models will become increasingly common

Hybrid deployments (local + cloud) will be standard practice

Value creation shifts from model training to application development

The era of foundation-model startups is likely ending, because the capital requirements are prohibitive

For developers, the message is straightforward: the tools to build sophisticated AI applications are now free and open. The question is what you'll create with them.

For enterprises, the calculation has changed: you can now own your AI stack entirely, with performance that was exclusive to tech giants just months ago.

For society, we face a practical question: in a world where powerful AI is widely accessible, how do we encourage responsible use?

OpenAI hasn't just released two models. They've changed the rules of engagement.

The future belongs not to whoever has the best model, but to whoever builds the most useful applications around these increasingly accessible capabilities. Whether you're a developer, enterprise leader, or policymaker, the time to engage with open AI isn't coming.

It's here.