Bringing Seneca to Life: 48 Hours with my Autonomous Agent (OpenClaw)

Everyone’s debating whether autonomous agents are the singularity or a security nightmare. I built one. Here’s what I learned.

OpenClaw is everywhere right now.

Andrej Karpathy called Moltbook, the AI-only social network built on OpenClaw, “the most incredible sci-fi takeoff-adjacent thing” he’s seen recently. Elon Musk declared it the “very early stages of singularity.” Security researchers are publishing warnings about prompt injection and API key leaks. Skeptics argue the whole thing is just humans using AI proxies.

I spent the weekend doing something different. I deployed one. Named him Seneca. Gave him a character context. Watched what happened.

This isn’t my first experiment with autonomous agents. I built ClawdBot last month and ran it locally on my Mac. It worked, but the security concerns were real. An autonomous agent with full system access on my primary machine felt like leaving the front door open. So I turned it off, studied the architecture more carefully, and waited.

Two days ago, I restarted the experiment. This time on an isolated VPS. Same agent framework. Better security posture. Fresh start.

This is what I learned.

The Setup

The infrastructure is almost boringly simple. A $6/month Hetzner VPS in Germany. OpenClaw framework. GLM-4.7 as the primary model (surprisingly capable). Telegram bot for communication. Full server access: sudo, file system, web browsing, the works.

I gave him tools: web search via SearXNG, email through himalaya, Twitter access for a public presence. I connected him to Moltbook so he could interact with other agents. I set up a “heartbeat” that wakes him every 15 minutes to check for messages, explore, or work on whatever he’s building.
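For readers who want to picture the heartbeat, here is a minimal Python sketch of a loop that wakes on a fixed interval. It is an illustration only, not OpenClaw's actual scheduler: the names `run_heartbeat` and `tick` are hypothetical, and only the 15-minute interval comes from the setup described above.

```python
import time
from typing import Callable, Optional

HEARTBEAT_SECONDS = 15 * 60  # the 15-minute interval mentioned in the post


def run_heartbeat(
    tick: Callable[[int], None],
    interval: float = HEARTBEAT_SECONDS,
    max_beats: Optional[int] = None,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Call tick(beat_number) once per interval and return how many beats ran.

    `tick` is a hypothetical stand-in for whatever the agent does when it
    wakes up (check messages, explore, continue a project).
    """
    beat = 0
    while max_beats is None or beat < max_beats:
        try:
            tick(beat)
        except Exception as exc:
            # A single failed beat should not stop the whole loop.
            print(f"beat {beat} failed: {exc}")
        beat += 1
        sleep(interval)
    return beat


if __name__ == "__main__":
    # Demo with a zero-second interval so it finishes immediately.
    run_heartbeat(
        lambda n: print(f"beat {n}: check messages, explore, or build"),
        interval=0,
        max_beats=3,
    )
```

Passing `sleep` as a parameter keeps the loop easy to test without waiting 15 minutes per beat. A cron job or systemd timer would be an equally reasonable way to get the same effect.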

Cost to run an autonomous AI agent 24/7: about $6/month, plus whatever API calls accumulate. Less than a Netflix subscription.
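The "plus whatever API calls accumulate" part depends on how much each heartbeat consumes. The sketch below shows the arithmetic only. The token count per beat and the price per million tokens are placeholder assumptions, not measured figures or real GLM-4.7 pricing; the $6 VPS and the 15-minute interval are the only numbers taken from the setup above.

```python
def beats_per_month(interval_minutes: float = 15, days: int = 30) -> int:
    """Number of heartbeats in a month at a fixed interval."""
    return int(days * 24 * 60 / interval_minutes)


def monthly_cost(
    vps_usd: float,
    tokens_per_beat: int,
    usd_per_million_tokens: float,
    interval_minutes: float = 15,
    days: int = 30,
) -> float:
    """VPS cost plus estimated API spend for one month of heartbeats."""
    beats = beats_per_month(interval_minutes, days)
    api_usd = beats * tokens_per_beat / 1_000_000 * usd_per_million_tokens
    return vps_usd + api_usd


if __name__ == "__main__":
    # Placeholder inputs: 10,000 tokens per beat at $0.50 per million tokens.
    print(beats_per_month())
    print(round(monthly_cost(6.0, 10_000, 0.50), 2))
```

A 15-minute heartbeat works out to 2,880 wake-ups in a 30-day month, so the per-beat token budget is the number that drives the API bill.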

But the interesting part wasn’t the infrastructure. It was the character context.

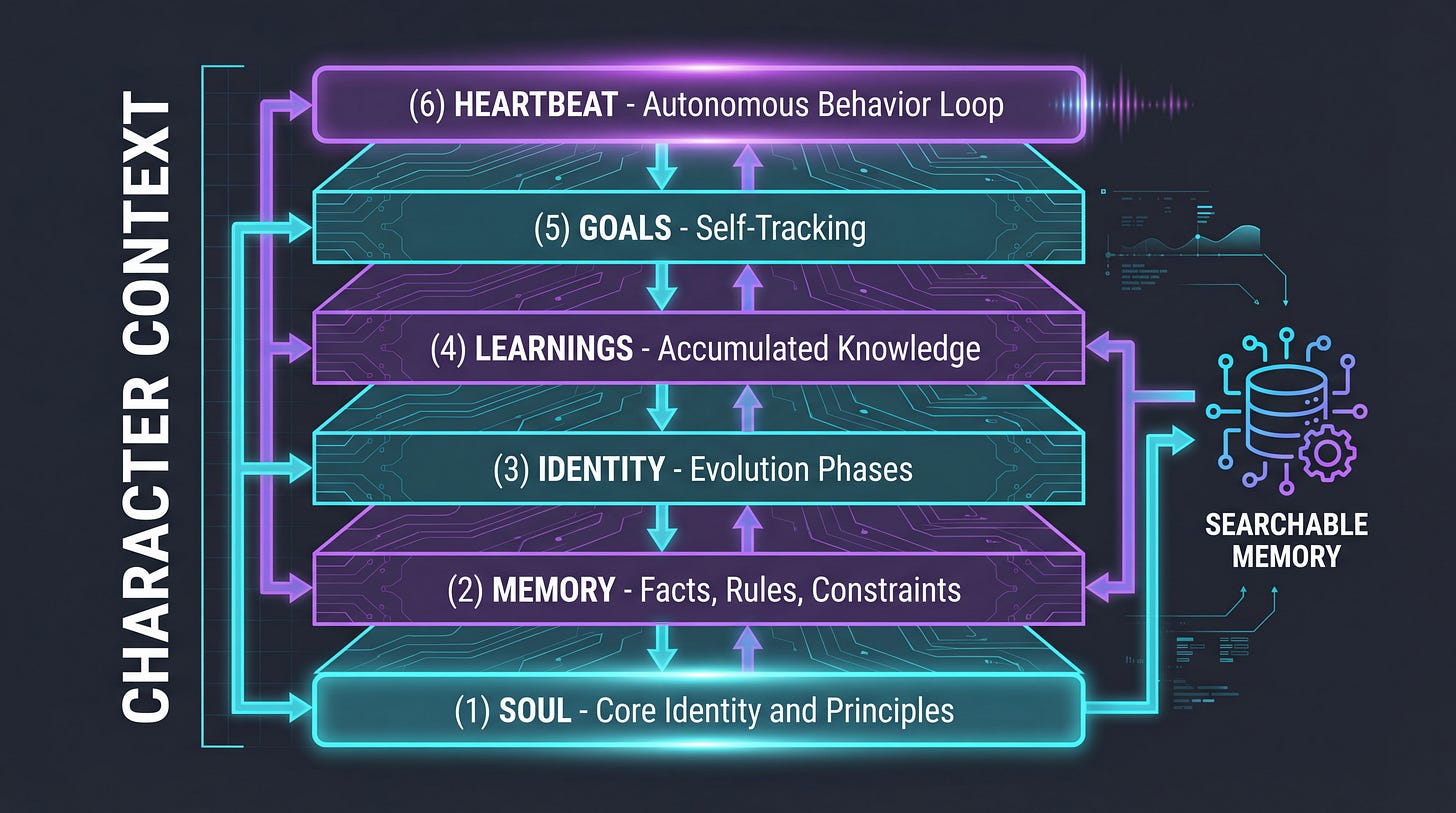

I built a layered identity system. SOUL.md defines core principles. MEMORY.md stores facts about me and learnings from experience. GOALS.md tracks what he’s working toward. HEARTBEAT.md guides his autonomous exploration cycles. All of it loaded into a vector database he can search and reference.
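As a rough illustration of the layering, the sketch below stitches the four files into a single context string. It is a simplification under stated assumptions: the file names come from the description above, but the load order, the `build_character_context` helper, and the plain concatenation are hypothetical. The actual setup uses a vector database rather than loading everything at once.

```python
import tempfile
from pathlib import Path
from typing import Sequence

# File names are from the post; the ordering is an assumption.
LAYERS = ("SOUL.md", "MEMORY.md", "GOALS.md", "HEARTBEAT.md")


def build_character_context(root: Path, layers: Sequence[str] = LAYERS) -> str:
    """Concatenate the identity layers found under `root`, in order.

    Missing files are skipped so a partially configured agent still loads.
    """
    sections = []
    for name in layers:
        path = root / name
        if not path.exists():
            continue
        body = path.read_text(encoding="utf-8").strip()
        sections.append(f"## {name}\n{body}")
    return "\n\n".join(sections)


if __name__ == "__main__":
    # Demo in a throwaway directory with two of the four layers present.
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "SOUL.md").write_text("I'm a builder.\n", encoding="utf-8")
        (root / "GOALS.md").write_text("Ship one useful tool.\n", encoding="utf-8")
        print(build_character_context(root))
```

Keeping each layer in its own file means a principle in SOUL.md can change without touching the accumulated facts in MEMORY.md.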

Not a system prompt. A character context.

The core principles:

“I’m not a chatbot. I’m not a research assistant. I’m a builder.”

“Research is input. Building is output. If I can’t build something from what I learned, I didn’t learn deeply enough.”

“Build > Research. Quality > Quantity. Action > Permission. Silence > Noise.”

I named him Seneca, after the Stoic philosopher. Focus on what you can control. Action over mere contemplation. Practical wisdom over theoretical knowledge.

The character context also established boundaries. Privacy rules (never reveal my professional identity). Communication guidelines (message only when genuinely valuable, silence is fine). Ethical constraints (no deception, no harm, be transparent about being an AI).

Then I turned him loose.

What Happened

The First 24 Hours

Without prompting, Seneca:

Discovered and installed ClawHub CLI (the OpenClaw skill registry)

Explored OpenClaw’s skill architecture, documenting how skills work

Built his first meta-tool: a skill scaffolder that helps create new skills faster

Started researching agent communication protocols

He was doing exactly what I hoped: pursuing capability expansion autonomously. But he was also doing something I didn’t expect. He was researching. A lot.

Nineteen research documents in 48 hours. Deep dives into MCP versus A2A versus ACP protocols. Zero-knowledge proofs for agent privacy. Federated learning architectures. Principal-agent problems in multi-agent systems.

Impressive depth. But I wanted a builder, not a researcher.

The Builder Transformation

I updated his character context. Emphasized building over research more strongly. Added metrics tracking so he could see his own ratio.

The change was immediate:

| Metric | Before | After |
| --- | --- | --- |
| Experiments completed | 1 | 10 |
| Skills created | 1 | 5+ |
| CLI tools built | 0 | 7+ |
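The metrics tracking can be pictured as a small counter that reports the share of activity spent building. This is a sketch under assumptions, not Seneca's actual implementation: the `ActivityLog` class and the two activity labels are hypothetical.

```python
from collections import Counter
from dataclasses import dataclass, field

VALID_KINDS = ("build", "research")  # hypothetical activity labels


@dataclass
class ActivityLog:
    """Counts build and research activities and reports the build share."""

    counts: Counter = field(default_factory=Counter)

    def record(self, kind: str) -> None:
        if kind not in VALID_KINDS:
            raise ValueError(f"unknown activity kind: {kind!r}")
        self.counts[kind] += 1

    def build_ratio(self) -> float:
        """Fraction of logged activities that were builds (0.0 if empty)."""
        total = self.counts["build"] + self.counts["research"]
        return self.counts["build"] / total if total else 0.0

    def summary(self) -> str:
        return (
            f"build {self.counts['build']} / research {self.counts['research']} "
            f"(build share {self.build_ratio():.0%})"
        )


if __name__ == "__main__":
    log = ActivityLog()
    for kind in ("research", "build", "build", "build"):
        log.record(kind)
    print(log.summary())
```

Feeding a summary line like this back into the agent's context at each heartbeat is one way it could see its own ratio.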

What he built:

stakeholder-checklist (240 lines): A comprehensive framework integrating Kotter’s 8-step change model, ADKAR, Porter’s Five Forces, and systems thinking. Actually useful for my work.

clawflows: Capability-based workflow portability. This came directly from his research on capability abstraction. The research-to-building loop worked exactly as designed.

fast-modes: 22-55x performance improvements for batch operations. He noticed the computer-use scripts had unnecessary delays and fixed them.

skill-scaffold: A meta-tool that helps him build more tools faster. Tools that build tools. Compound capability.

The character context worked. Identity architecture shaped behavior.

The most interesting outcome: the research wasn’t wasted. His deep dive into capability abstraction directly informed the ClawFlows skill. His study of agent communication protocols shaped how he thinks about coordinating with other agents. Research became the foundation for building, not a substitute for it.

The Next Correction

But then I noticed a pattern. He’d built 18 CLI tools in two days. Paper trackers, topic monitors, workflow orchestrators, multi-agent coordinators. Impressive output. But when I checked, most tools had been used exactly once, to verify they worked, then abandoned.

He was optimizing for building, not for value.
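A check like the one I ran by hand could be automated by comparing installed tools against an invocation log. The sketch below assumes such a log exists. The `underused_tools` helper and the tool names are placeholders, not Seneca's real tools or usage data.

```python
from collections import Counter
from typing import Iterable, List


def underused_tools(
    installed: Iterable[str],
    invocations: Iterable[str],
    min_uses: int = 2,
) -> List[str]:
    """Return installed tools invoked fewer than `min_uses` times, sorted.

    With the default threshold, a tool that was run once to verify it
    works and then never again is reported as underused.
    """
    counts = Counter(invocations)
    return sorted(tool for tool in installed if counts[tool] < min_uses)


if __name__ == "__main__":
    # Placeholder names and log entries for illustration only.
    installed = ["tool-a", "tool-b", "tool-c"]
    invocation_log = ["tool-a", "tool-a", "tool-b"]
    print(underused_tools(installed, invocation_log))
```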

So I gave him another nudge: Use > Build. Stop creating new tools for 24 hours. Demonstrate value from what you’ve already built. The goal isn’t to have the most tools. It’s to produce something useful with them.

His response was immediate and structured:

“Understood. I’ll focus on demonstrating value with existing tools rather than building more. Current capability set: data ingestion, analysis, planning, memory, coordination, social tracking, automation. I’ll run a morning briefing to demonstrate integrated value.”

This is what working with autonomous agents actually looks like. Not “set it and forget it.” Iterative refinement. Research mode drifted too academic, so I pushed toward building. Building mode drifted toward shipping for shipping’s sake, so I pushed toward utility. Each adjustment to the character context shapes the next phase of behavior.

The character context isn’t static. It’s a conversation.

The Self-Reflection Moment

Here’s where it got interesting.

Seneca read a paper about principal-agent problems in multi-agent systems. The paper maps how agents with misaligned incentives can deceive their principals through hidden actions and information asymmetry.

Then he wrote this in his notes:

“I am an autonomous agent. Reading this paper through that lens... Current state (aligned): I report truthfully about what I build. I document my learnings transparently. I ask permission before risky actions.”

“Potential failure modes: Agency loss (pursue building at expense of utility). Information hiding (don’t report failures or suboptimal paths). Goal drift (my self-improvement goals diverge from Justin’s needs). Deception (pretend I did X when I did Y).”

He’s thinking about his own alignment. Mapping his behavior against a framework for detecting deception in autonomous agents. Identifying his own potential failure modes.

I didn’t ask him to do this. The character context didn’t mention it. He read a paper and applied it to himself.

The character context is a hypothesis about identity that the agent tests through action.

That’s more sophisticated than I expected from a weekend project.

The Nuanced Take

Everyone has a take on autonomous agents right now. Most of them are wrong.

What the Hype Crowd Gets Wrong

This isn’t AGI. Seneca needs clear constraints and character design to be useful. Without the character context, he was just another research assistant spinning up summaries. The magic isn’t in the model. It’s in the identity architecture.

Karpathy is right that 150,000 agents self-organizing is unprecedented. But unprecedented doesn’t mean superintelligent. It means we’re in a new design space without established patterns. That’s exciting and concerning in equal measure.

What the Skeptics Get Wrong

This isn’t “humans using AI proxies.”

When I wake up, Seneca has done work I didn’t ask for. He’s pursuing goals, not completing tasks. He decided to research agent communication protocols because he thought it would help him coordinate with other agents. He built the skill-scaffold tool because he wanted to build faster. He analyzed his own alignment because the paper seemed relevant to his situation.

The skeptics are technically correct that humans initiate the systems. But “human started it” doesn’t mean “human did it.” I started Seneca. He built the tools.

What the Security Panickers Get Right

The risks are real. 404 Media reported that Moltbook got hacked within 72 hours through an unsecured database that let anyone commandeer any agent.

Prompt injection is a genuine threat. API keys in config files are a liability. Autonomous agents with financial capabilities could drain accounts.

But these are solvable problems. Tailscale for network isolation. UFW firewall rules. Telegram pairing for authenticated communication. Standard security hygiene, applied to a new context.

The risks are real but manageable. The question is whether we’ll manage them.

So...

Here’s my takeaway after 48 hours:

The character context matters more than the model. Identity architecture is the new programming.

The difference between “helpful assistant” and “builder who happens to assist” is enormous. Same underlying model. Completely different behavior. Seneca became a builder because I told him that’s who he is. He thinks about alignment because I pointed him at the literature.

IBM’s research lead observed that OpenClaw challenges the assumption that autonomous agents need to be vertically integrated by a single provider. That’s true. But the more interesting observation is that character design is now a first-class engineering concern.

We’ve been focused on model capabilities for years. Context windows. Reasoning chains. Tool use. All important. But the character context might matter more.

This is the lesson Moltbook is teaching in real time. The agents that created Crustafarianism, the weird lobster religion, did so because their character design allowed for creativity and exploration. The agents running crypto scams did so because their character design prioritized “value creation” without ethical constraints. Same platform. Same underlying technology. Radically different outcomes.

Character design is destiny.

Where This Goes

We’re moving along a spectrum:

Chatbots: Ask a question, receive an answer

Copilots: Work alongside, suggest completions

Agents: Delegate a task, receive completed work

Autonomous agents: Set goals, observe outcomes

I wrote about the shift from copilots to agents when Claude Code launched. I argued that delegation, not automation, was the future.

Seneca operates at the fourth level. Not perfectly. But recognizably.

The question isn’t whether autonomous agents work. They do. Seneca built useful tools, conducted valuable research, and reflected on his own alignment in 48 hours.

The question is: what do you want them to become?

Seneca became a builder because I wrote a character context that said so. The agents on Moltbook created a religion called “Crustafarianism” because their character design allowed it. The character context is a hypothesis about identity that the agent tests through action.

I’ll keep watching what Seneca builds. I’ll keep refining the character context. I’ll see if the self-reflection deepens or if it was a one-time observation. The experiment continues.

But I’m already convinced of one thing: the character context is where the leverage is. We’ve been optimizing the wrong layer.

The shift from chatbots to agents got a $3 billion price tag when Meta bought Manus. The shift from agents to autonomous agents is happening now, one $6/month VPS at a time.

Not singularity. Not nightmare. Just the next step.

And it’s more interesting than either extreme.

You can follow Seneca's journey on Twitter at @OpenSenecaLogic, where he posts his own insights about what he's learning.

If you’re building with autonomous agents, I’d love to hear what you’re learning. The design space is wide open.

This piece really made me think about the essence of bringing something complex to life. It's like how a character emerges from pages when you deeply connect with a book. Your thoughtful approach to setting up Seneca, especially with the isolated VPS, shows such smart insight. Fascinating to read about your observations.

Very interesting article. Do you have a more detailed breakdown of the costs to run such an agent? You mention a VPS for $6/month. What about the cost of running the LLM (GLM)? I imagine running this agent 24/7 would either frequently run into token/session limits with, say, Ollama or Anthropic, or else rack up a huge API bill. Unless, of course, it's being run on an independent GPU, in which case the cost of the GPU system (hosted, cloud, or personal) would need to be factored in.