Unlocking Your Second Brain

Building a Custom AI Chief of Staff

A leap beyond generic AI, into the realm of personalized knowledge and power.

Imagine having an AI assistant with a perfect memory of everything you've ever learned, thought, or created. An intelligent partner who lives right where you work, ready to answer nuanced questions, draft ideas based on your past insights, and recall critical information instantly. This isn't science fiction; it's the power of a bespoke AI, tailored to your unique knowledge landscape.

Like many in the world of business, data science, and AI exploration, I've been captivated by the potential of Large Language Models (LLMs). Tools like ChatGPT and the flexibility offered by platforms like OpenRouter are transformative. Yet, a fundamental element often feels missing: personal context. These powerful AI brains are brilliant generalists, but they lack an intimate understanding of your specific projects, the years of notes you've diligently cultivated, and the unique insights that reside within your personal knowledge vault.

"The key to unlocking true AI partnership lies not just in the model's capabilities, but in its understanding of your world."

I found myself constantly context-switching – jumping between my note-taking application, the incredible Obsidian, and my coding environment, Visual Studio Code. It was a productivity bottleneck that hindered the seamless flow of ideas. That's when I embarked on a mission: to fuse my carefully curated knowledge base directly with the intelligence of a modern LLM, all within the familiar confines of VS Code.

This isn't just about answering simple queries; it's about creating "Seneca," my personal AI Chief of Staff. An always-available partner who has effectively "read" my entire digital life, ready to assist with the complexity of strategic thinking and data-driven decision-making.

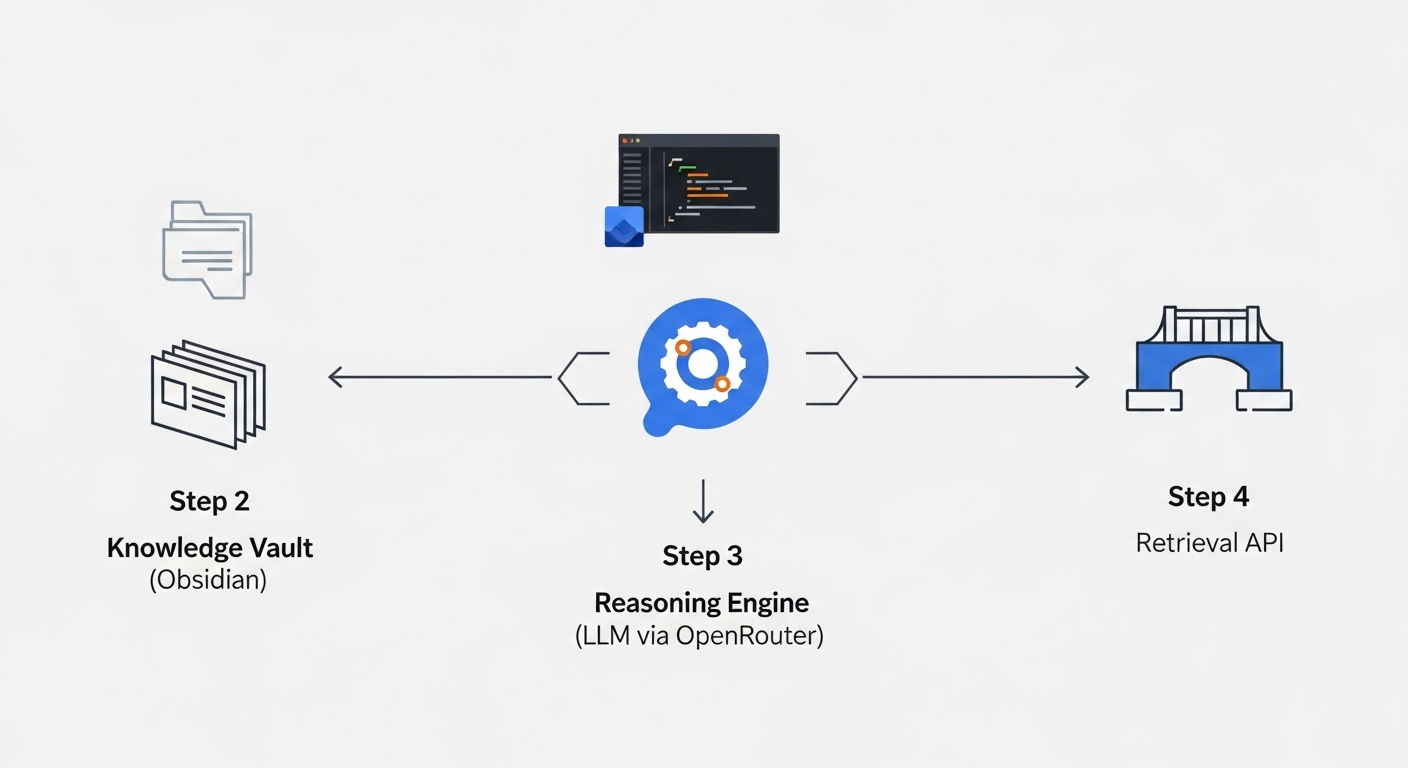

The Blueprint: Four Pillars of a Personalized AI

To build this "second brain," I architected a system based on the principles of Retrieval-Augmented Generation (RAG), with four interconnected components.

1. The Sacred Vault (Obsidian): This is the bedrock, your meticulously organized collection of Markdown notes. Choosing a local, file-based system like Obsidian ensures privacy, longevity, and platform independence. It's your long-term, human-readable memory.

2. The Integrated Workspace (Roo Code in VS Code): This is where the magic unfolds. Roo Code, the AI assistant I use within VS Code, acts as the central interface. Its ability to handle various LLMs and support custom commands makes it the ideal command center for "Seneca."

3. The Intelligent Brain (OpenRouter's LLM Ecosystem): This provides the raw reasoning power. Roo Code’s seamless integration with OpenRouter is critical. It allows me to tap into a vast array of cutting-edge LLMs (from OpenAI, Anthropic, Google, and more) without being tied to a single vendor.

4. The Knowledge Retrieval Bridge (My Custom RAG API): This is the secret sauce. It’s a locally hosted Python API designed for one purpose: to intelligently search your Obsidian vault and retrieve the most relevant information based on a given query.

Building the Bridge: From Notes to Intelligent Assistance

The process, while requiring a touch of technical know-how, is surprisingly accessible. For those who want to dive straight into the code, I’ve made the entire project open-source and available on GitHub: BioInfo/vault-rag.

Here’s a simplified overview of how I built this retrieval bridge:

Step 1: Indexing Your Intellectual Capital: The first step is to make your vault searchable by meaning, not just keywords. This involves a process called "embedding." I used LlamaIndex, a powerful Python framework, to orchestrate reading my Markdown files, creating semantic embeddings, and storing them in a local vector database called ChromaDB. Think of this as creating a highly efficient index of your thoughts.
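As a rough illustration of what this indexing step involves, here is a dependency-free sketch. It is not the code from the repository and does not use LlamaIndex or ChromaDB: the hashed bag-of-words vector in toy_embed is a crude stand-in for a real embedding model (it matches words, not meaning), and the in-memory list stands in for the vector database. The function names, chunk size, and vector dimension are all assumptions made for illustration.

```python
import hashlib
import math
import re
from pathlib import Path

DIM = 256  # assumed size of the toy vector; real embedding models define their own


def chunk_markdown(text, max_chars=500):
    """Group paragraphs into chunks of roughly max_chars characters.

    A single paragraph longer than max_chars is kept whole.
    """
    chunks, current = [], ""
    for para in re.split(r"\n\s*\n", text):
        para = para.strip()
        if not para:
            continue
        if current and len(current) + len(para) + 2 > max_chars:
            chunks.append(current)
            current = para
        else:
            current = f"{current}\n\n{para}" if current else para
    if current:
        chunks.append(current)
    return chunks


def toy_embed(text):
    """Hash each word into a fixed-size count vector, then L2-normalise it."""
    vec = [0.0] * DIM
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.md5(word.encode()).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def build_index(vault_dir):
    """Walk the vault and return one {source, text, vector} record per chunk."""
    index = []
    for path in sorted(Path(vault_dir).rglob("*.md")):
        for chunk in chunk_markdown(path.read_text(encoding="utf-8")):
            index.append(
                {"source": path.name, "text": chunk, "vector": toy_embed(chunk)}
            )
    return index
```

Calling build_index on a vault folder returns a list that can be searched by comparing vectors. A real pipeline keeps the same shape (read, chunk, embed, store) but swaps in a proper embedding model and a persistent store.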

Step 2: Creating the Intelligent Concierge (The API): Next, I built a lightweight API using FastAPI, a modern web framework for Python. This API has a single, crucial endpoint: /retrieve. When it receives a question, it queries the ChromaDB database and returns the most relevant text snippets.

Step 3: The Seamless Integration (Roo Code's Slash Command): The final piece is connecting this retrieval power to my workflow. I configured a custom /vault slash command within Roo Code. Now, when I type /vault followed by my question, Roo Code triggers the API, fetches the relevant context, and seamlessly injects it into the prompt before "Seneca" formulates an answer.
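The retrieve-then-inject flow can be sketched in a few lines of plain Python. This is a minimal sketch under stated assumptions, not the repository's implementation: the word-count cosine similarity stands in for a ChromaDB query, the response shape (a dict with a "results" list) is hypothetical, and the prompt template is only one plausible way to place snippets ahead of the question. In a FastAPI app, a function like retrieve would sit behind the /retrieve route.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: lower-cased word counts (a stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def retrieve(query, notes, top_k=3):
    """Rank notes against the query and return the best matches.

    The {"query": ..., "results": [...]} shape is an assumption for this sketch.
    """
    q = embed(query)
    scored = [
        {"source": n["source"], "text": n["text"], "score": cosine(q, embed(n["text"]))}
        for n in notes
    ]
    scored.sort(key=lambda r: r["score"], reverse=True)
    # Drop notes that share no words with the query at all.
    return {"query": query, "results": [r for r in scored[:top_k] if r["score"] > 0]}


def build_prompt(question, response):
    """Place the retrieved snippets ahead of the question, as a /vault command might."""
    context = "\n\n".join(f"[{r['source']}]\n{r['text']}" for r in response["results"])
    return (
        "Answer using the notes below. If they do not cover the question, say so.\n\n"
        f"--- NOTES ---\n{context}\n--- END NOTES ---\n\n"
        f"Question: {question}"
    )


# Hypothetical sample notes, standing in for chunks of an Obsidian vault.
SAMPLE_NOTES = [
    {"source": "strategy.md", "text": "Pricing strategy for the enterprise tier"},
    {"source": "recipes.md", "text": "Sourdough starter feeding schedule"},
]

if __name__ == "__main__":
    question = "What is our enterprise pricing strategy?"
    print(build_prompt(question, retrieve(question, SAMPLE_NOTES, top_k=1)))
```

Only the snippets that survive the ranking reach the prompt, which is what keeps the context short and relevant.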

The retrieval and injection happen in milliseconds. The result? "Seneca," my AI Chief of Staff, answers my questions with a deep understanding of my personal knowledge, drawing directly from my own "second brain."

Why This Matters: The Power of Personalized AI

This approach transcends the limitations of generic AI assistants, offering significant advantages for business leaders, data scientists, and anyone eager to leverage AI more effectively:

Unprecedented Flexibility: By separating the knowledge retrieval from the reasoning engine, I can choose the best LLM for any given task via OpenRouter without needing to re-index my notes or change my core workflow.

Data Sovereignty and Privacy: My entire knowledge base remains on my local machine. Only the precise, relevant context needed to answer a specific question is temporarily shared with the LLM through Roo Code.

A Truly Intelligent Partner: "Seneca" isn't just a chatbot; it has access to my accumulated knowledge, allowing for nuanced, context-aware responses and a deeper level of assistance.

Compounding Intellectual Value: Every new note I add to my Obsidian vault makes "Seneca" more knowledgeable and valuable over time. My personal knowledge base becomes a dynamic, AI-enhanced asset.
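To make the flexibility and privacy points concrete, here is a small sketch of what a request to the model might contain. Roo Code handles this step in the setup described above, so this is purely illustrative: build_request is a hypothetical helper, the payload follows the OpenAI-style chat format that OpenRouter accepts, and the model identifiers are examples that should be checked against OpenRouter's current model list.

```python
import json


def build_request(model, question, snippets):
    """Build (but do not send) a chat-completions payload for the chosen model.

    Only the retrieved snippets are included; the rest of the vault never
    appears in the request.
    """
    context = "\n\n".join(snippets)
    return {
        "model": model,
        "messages": [
            {
                "role": "system",
                "content": "Answer from the provided notes.\n\n" + context,
            },
            {"role": "user", "content": question},
        ],
    }


if __name__ == "__main__":
    # Hypothetical snippet, standing in for the output of the retrieval step.
    snippets = ["Q3 review: churn fell after the onboarding redesign."]
    # Example model ids; swapping them changes nothing else in the payload.
    for model in ("openai/gpt-4o", "anthropic/claude-3.5-sonnet"):
        payload = build_request(model, "What drove the churn change?", snippets)
        print(model, "->", len(json.dumps(payload)), "bytes")
```

Switching models changes a single field, and the index built from the notes is untouched.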

"Imagine asking your AI assistant to draft a strategy document, and it does so not just with general knowledge, but by referencing your past strategic thinking, project analyses, and relevant meeting notes."

The Journey of a Thousand Notes Begins with a Few Lines of Code

Building this personalized AI Chief of Staff isn't about becoming a full-stack developer overnight. It’s about recognizing the power of combining readily available tools with a bit of "elbow grease" to solve a real problem – the lack of personal context in AI assistants.

The era of truly personalized AI is dawning. By taking a hands-on approach, you can build systems that are deeply integrated with your unique knowledge and workflows, unlocking new levels of productivity and insight. This journey took me from scattered notes to a coherent, AI-powered partner, and it is a testament to the power of a little inspiration and the remarkable capabilities now within our reach.

Ready to start building your own "Seneca"? The blueprint is here. The potential is limitless.

For my fellow data scientists, developers, and the technically curious who are inspired to build their own system, I encourage you to explore the complete source code for my Retrieval Bridge. You can view it, fork it, and adapt it to your own needs on GitHub.

GitHub Repository: https://github.com/BioInfo/vault-rag