The Future of Personal Health Intelligence

What I learned building my own health AI before ChatGPT launched theirs

OpenAI just launched ChatGPT Health. 230 million people ask health questions on ChatGPT every week. That’s not a typo. 230 million. Weekly.

They built it with 260 physicians across 60 countries. It connects to Apple Health, medical records via b.well, and wellness apps like MyFitnessPal. Encrypted isolation. Won’t train their models on your health data.

This is a big deal. Health AI just went mainstream.

My reaction: That’s awesome. I’ve been building toward the same destination for months, and I think what I built is pretty cool too.

I’m genuinely excited that people who don’t have access to the technology I do, or the 25 years I’ve spent interpreting large human health datasets, are going to be able to do this. ChatGPT Health brings personal health AI to the masses. That’s a win for everyone.

And for those who want to go deeper? There’s room for that too.

What ChatGPT Health Is (and Isn’t)

What OpenAI announced:

Apple Health integration

Medical records via b.well connectivity

Wellness apps (MyFitnessPal, Function, Weight Watchers)

260 physicians consulted over two years

Encrypted, isolated from main ChatGPT

Won’t be used to train models

What they’re clear about:

“Not intended for diagnosis or treatment”

LLMs predict likely responses, not correct ones

Prone to hallucinations

Not a replacement for medical care

This is responsible positioning. They’re building a tool for informed health conversations, not a diagnostic system. The TechCrunch coverage highlighted both the scale of demand and the careful guardrails.

The question I kept asking: what happens when you want to go deeper? When you want your genomics layered on your clinical data layered on seven years of wearable data? When you want to train a foundation model on your own sleep patterns?

ChatGPT Health is the on-ramp. What I built is what’s down the road.

OpenAI built this with 260 physicians. I built mine on 25 years interpreting large human health datasets and 18 months of building wicked fast with AI. Both valid. Both exciting.

The 15-Minute Problem

Your doctor sees you twice a year. Fifteen minutes each visit. Maybe thirty if you’re lucky.

They’re juggling 100+ other patients. They’re documenting in the EHR while you’re talking. They’re running behind schedule because someone before you needed more time.

You get lab results with a “normal” stamp and no context. Is your albumin at the high end of normal and declining? Low end and stable? Nobody tells you. Nobody has time to tell you.

Your Apple Watch, meanwhile, has been collecting data constantly for seven years:

1,014,608 heart rate measurements

45,897 sleep samples

2,400+ nights of tracked sleep

3,854 workouts with GPS routes

But it stays trapped in charts you never open. Graphs without interpretation. Data without insight.

The system isn’t designed to help you understand your health. It’s designed to process you efficiently.

This isn’t an indictment of doctors. They’re doing their best within constraints they didn’t create. But those constraints are real. And they leave a gap.

More and more people are taking health into their own hands. Not because they distrust doctors. Because they want to arrive at appointments prepared. Because they want to understand their own bodies. Because the data exists and nobody’s helping them interpret it.

The quantified self movement was the first wave. Fitbits and step counts. Interesting but shallow.

AI is the second wave. The ability to ask questions in natural language and get answers grounded in your actual data. “What affects my sleep?” “How has my resting heart rate trended since I started exercising?” “What’s unusual in my recent patterns?”

This is why 230 million people ask health questions on ChatGPT every week. The demand was always there. The tools are finally catching up.

Your doctor sees you fifteen minutes twice a year. Your Apple Watch sees you 24/7, year after year. The data asymmetry is staggering.

What I Built

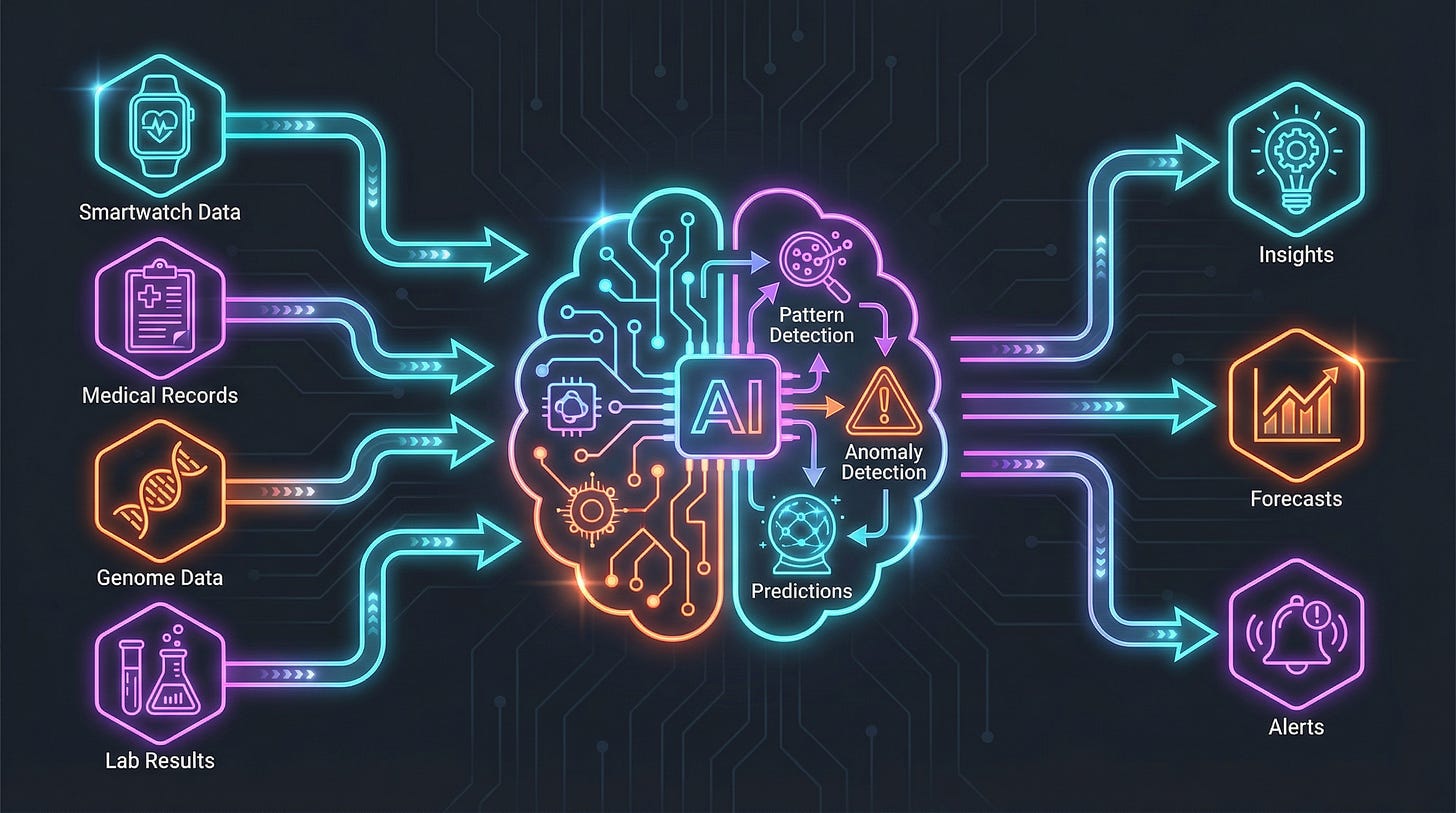

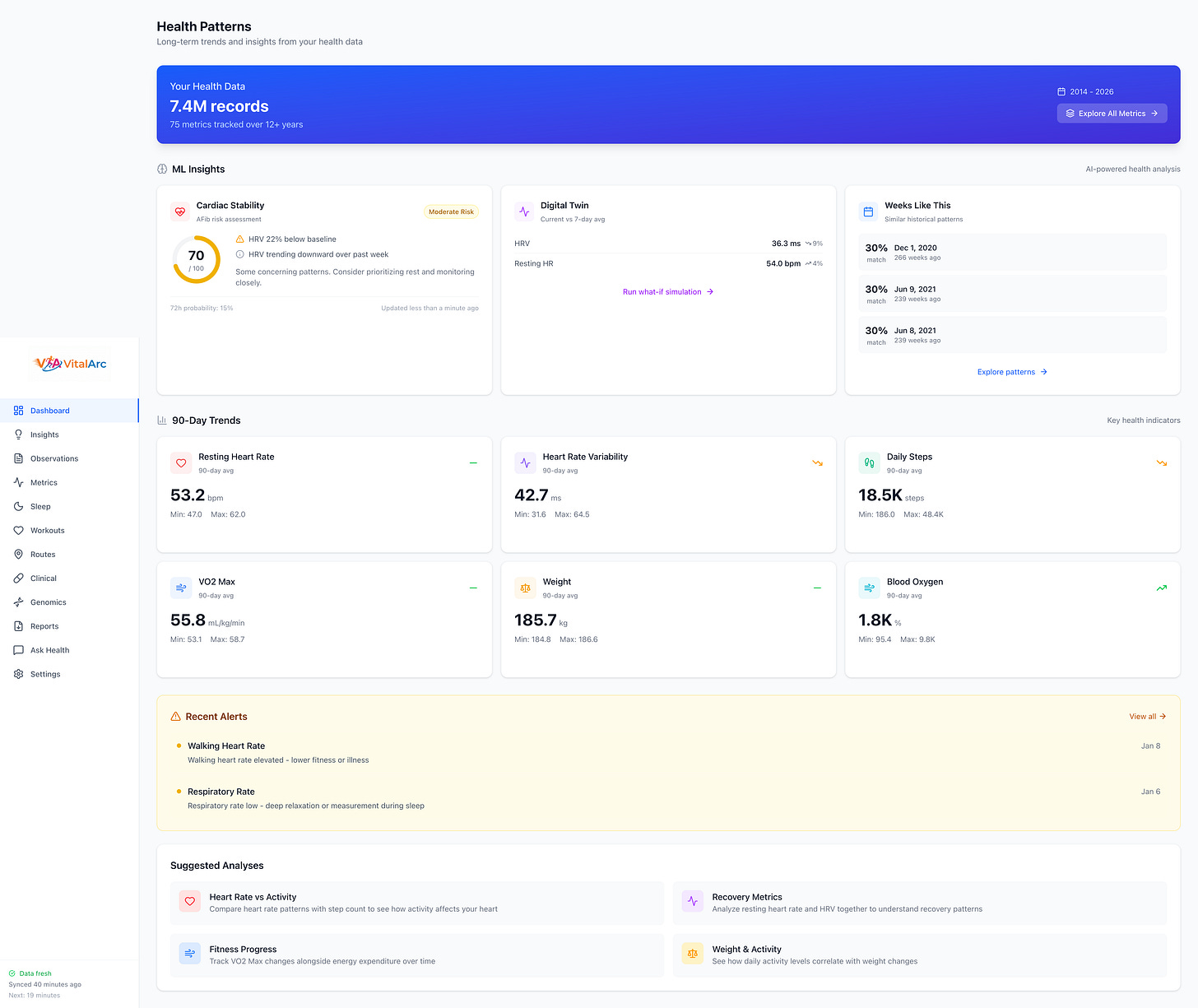

VitalArc is a personal health intelligence platform that transforms Apple Health exports into actionable insights.
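For anyone who hasn't opened one: an Apple Health export is one large XML file made up of `Record` elements. Here is a minimal sketch of counting records per type with the Python standard library. The sample XML is made up for illustration, and `count_records` is a hypothetical helper, not VitalArc's actual importer.

```python
import io
import xml.etree.ElementTree as ET
from collections import Counter

# Tiny stand-in for export.xml; a real export holds millions of <Record> elements.
SAMPLE = b"""<HealthData>
  <Record type="HKQuantityTypeIdentifierHeartRate" unit="count/min"
          startDate="2024-01-01 08:00:00 -0800" value="58"/>
  <Record type="HKQuantityTypeIdentifierHeartRate" unit="count/min"
          startDate="2024-01-01 08:05:00 -0800" value="61"/>
  <Record type="HKCategoryTypeIdentifierSleepAnalysis"
          startDate="2024-01-01 23:10:00 -0800"
          value="HKCategoryValueSleepAnalysisAsleepCore"/>
</HealthData>"""


def count_records(source):
    """Stream the XML and count <Record> elements by their type attribute."""
    counts = Counter()
    for _event, elem in ET.iterparse(source, events=("end",)):
        if elem.tag == "Record":
            counts[elem.get("type")] += 1
        elem.clear()  # drop attributes once read to keep memory use low
    return counts


counts = count_records(io.BytesIO(SAMPLE))
for record_type, n in counts.most_common():
    print(record_type, n)
```

Streaming with `iterparse` matters here because a multi-year export can run to gigabytes, which is too much to load as a single tree.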

The Scale:

| Data Type | Records | Timeline |
| --- | --- | --- |
| Total Health Records | 6M+ | 7 years |
| Heart Rate Measurements | 1,014,608 | Continuous |
| Sleep Samples | 45,897 | 2,400+ nights |
| Clinical Documents | 2,104 | Years of records |
| ECG Recordings | 69 | Since 2022 |
| Workout Routes | 2,023 | GPS tracked |
| Genetic Variants | 600,000 | Whole genome |

The Architecture:

DuckDB for 6M time-series records (columnar, fast)

SQLite for clinical data (FHIR documents)

LanceDB for semantic search (embeddings)

FastAPI backend with ML pipeline

React frontend with visualizations

Ollama for local LLM (or external if you prefer)
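To make the clinical-data layer concrete, here is a minimal sketch of storing FHIR Observation documents in SQLite and pulling out one lab test's history. The table name, the `lab_history` helper, and the albumin values are all hypothetical. The post doesn't describe VitalArc's real schema, so treat this as one plausible shape rather than the actual design.

```python
import json
import sqlite3

# Hypothetical schema: one row per FHIR resource, stored as raw JSON.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE fhir_resource (id INTEGER PRIMARY KEY, resource_type TEXT, body TEXT)"
)


def make_observation(date, value):
    """Build a minimal FHIR Observation for serum albumin (LOINC 1751-7)."""
    return {
        "resourceType": "Observation",
        "code": {"coding": [{"system": "http://loinc.org", "code": "1751-7"}]},
        "effectiveDateTime": date,
        "valueQuantity": {"value": value, "unit": "g/dL"},
    }


# Synthetic results, for illustration only.
for date, value in [("2022-03-01", 4.8), ("2023-03-01", 4.5), ("2024-03-01", 4.2)]:
    obs = make_observation(date, value)
    conn.execute(
        "INSERT INTO fhir_resource (resource_type, body) VALUES (?, ?)",
        (obs["resourceType"], json.dumps(obs)),
    )


def lab_history(conn, loinc_code):
    """Return (date, value) pairs for one lab test, oldest first."""
    rows = conn.execute(
        "SELECT body FROM fhir_resource WHERE resource_type = 'Observation'"
    )
    history = []
    for (body,) in rows:
        obs = json.loads(body)
        codes = {coding.get("code") for coding in obs["code"]["coding"]}
        if loinc_code in codes:
            history.append((obs["effectiveDateTime"], obs["valueQuantity"]["value"]))
    return sorted(history)


albumin = lab_history(conn, "1751-7")
```

Keeping the raw JSON next to a few indexed columns is a common compromise for FHIR data: the documents stay intact, and simple filters stay fast.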

The Timeline: Built the core in days with Claude Code. Not months. Days.

Before AI coding assistants, this would have been a multi-month project. With Claude Code, I had a working dashboard within 48 hours. The foundation model took longer (more on that), but the core application? Days.

The Sleep Foundation Model

I read the SleepFM paper in Nature Medicine. Researchers at Stanford built a foundation model that predicts 130 diseases from one night of sleep data. C-index of 0.84 for all-cause mortality. 0.89 for Parkinson’s. Trained on 585,000 hours of clinical polysomnography.

One problem: polysomnography costs $1,000-3,000 per night. You go to a clinic. They wire you up. You sleep in a strange bed. You get maybe 1-2 nights of data.

I have 2,400 nights of sleep data from my Apple Watch. Against the 1-2 nights a sleep clinic captures, that’s roughly 1,200-2,400x more temporal depth than any individual in a clinical study.

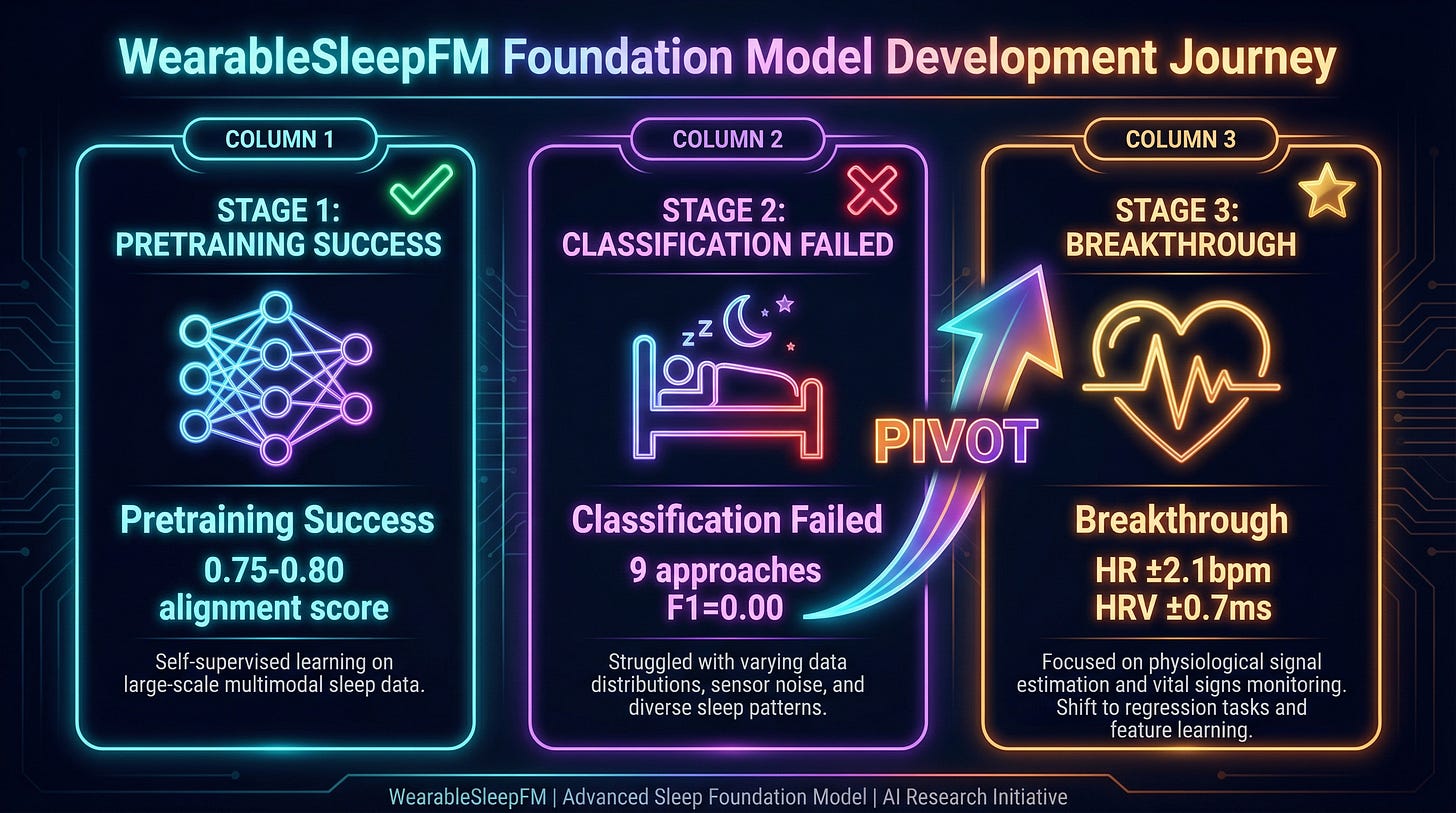

So I built WearableSleepFM. And this is where things got interesting.

The Vision

Replicate SleepFM for consumer wearables. Supervised sleep staging (Wake/Light/Deep/REM), then predict health outcomes.

The Failure

Stage 1 pretraining went beautifully. Cross-modality alignment of 0.75-0.80. Embeddings that captured real physiology. Excellent.

Then Stage 2. Sleep staging. Nine approaches. All failed. F1 scores between 0.00 and 0.28. Kappa near zero (random chance).

The problem? Apple Watch sleep stage labels aren’t learnable from the sensor signals. They’re algorithmically derived, not ground truth. The model couldn’t learn a mapping that doesn’t exist.
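A quick aside on why "kappa near zero" means chance. Cohen's kappa compares observed agreement p_o with the agreement p_e you'd expect by luck: kappa = (p_o - p_e) / (1 - p_e). A minimal sketch with synthetic labels (not my actual results) shows how a model can look acceptable on accuracy and still score zero:

```python
from collections import Counter


def cohens_kappa(y_true, y_pred):
    """Agreement between two label sequences, corrected for chance agreement."""
    n = len(y_true)
    observed = sum(t == p for t, p in zip(y_true, y_pred)) / n
    true_freq = Counter(y_true)
    pred_freq = Counter(y_pred)
    # Chance agreement: probability both sides pick the same label independently.
    expected = sum(true_freq[label] * pred_freq[label] for label in true_freq) / (n * n)
    if expected == 1.0:
        return 0.0  # both sequences use a single identical label; kappa is undefined
    return (observed - expected) / (1 - expected)


# Synthetic night: a "model" that always predicts the majority stage.
stages = ["Light"] * 6 + ["Deep"] * 2 + ["REM"] * 2
always_light = ["Light"] * 10

kappa_majority = cohens_kappa(stages, always_light)
kappa_perfect = cohens_kappa(stages, stages)
```

The always-Light predictor is right 60% of the time, yet its kappa is zero because that 60% is exactly what luck would give it. That is why kappa, not accuracy, is the metric to watch for sleep staging.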

This is where Claude hits its limits. AI coding assistants are incredible at building interfaces. Fast. Clean. The VitalArc dashboard? Pretty much built itself. But foundation models? That requires domain expertise, debugging intuition, and the willingness to pivot when the data tells you you’re wrong.

The Breakthrough

We were solving the wrong problem.

Users don’t need sleep stage classification (“REM: 1h 23m”). They need predictions. Will I feel recovered tomorrow? Is my heart health improving? Should I rest today?

Pivoted to temporal outcome prediction. Instead of single-night analysis, use 14 nights of context. LSTM over the temporal sequence.
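The data preparation behind that pivot can be sketched in a few lines: slide a 14-night window over the nightly features and pair each window with the outcome that follows it. The function name, the two features, and the numbers below are illustrative assumptions, not the real pipeline.

```python
def make_windows(nightly_features, targets, context=14):
    """Pair each run of `context` consecutive nights with the outcome that follows.

    nightly_features: per-night feature vectors, oldest first.
    targets: same length; targets[i] is the outcome measured after night i.
    """
    windows = []
    for end in range(context, len(nightly_features) + 1):
        history = nightly_features[end - context:end]  # the `context` nights before `end`
        windows.append((history, targets[end - 1]))    # outcome after the last night
    return windows


# Synthetic example: 20 nights, 2 features per night (hours asleep, mean HR).
nights = [[7.0 + 0.1 * i, 55.0 - 0.2 * i] for i in range(20)]
next_day_hrv = [40.0 + i for i in range(20)]

windows = make_windows(nights, next_day_hrv)
```

Each window is a 14 x n_features sequence, which is the shape an LSTM consumes one night per timestep. Twenty nights yield seven training examples, so years of wearable data add up quickly.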

Results:

Heart rate prediction: ±2.1 bpm (within device measurement noise)

HRV prediction: ±0.7 ms (better than device accuracy)

Sleep trend prediction: 68.9% accuracy (vs 33% random)

The failure forced us to find a fundamentally better approach.

Nine classification approaches failed. The tenth wasn’t better classification. It was realizing we were solving the wrong problem.

The Lesson

Consumer wearables need different ML approaches than clinical devices. Clinical: 30+ channels, gold standard labels, supervised learning works. Consumer: ~10 channels, algorithmic labels, unsupervised + temporal context works.

I think this is publishable research: as far as I know, the first consumer wearable foundation model with clinical utility. And it came from a failure that forced a pivot.

What I Actually Learned

This is where it gets interesting. Not the architecture. The discoveries.

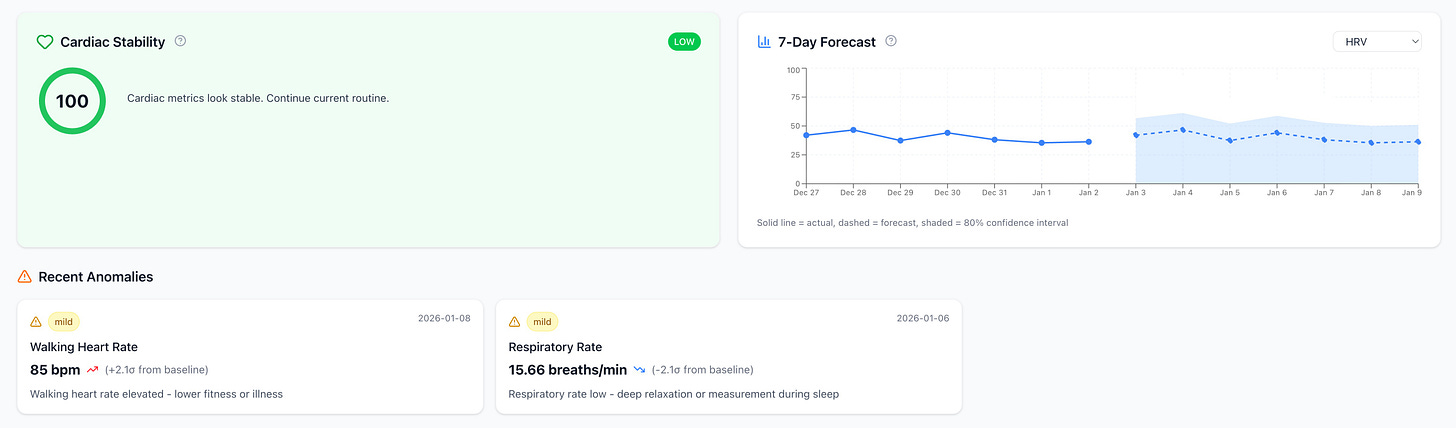

Discovery 1: The Airplane Anomaly

The system flagged a respiration rate drop. Anomalous. I looked at the date. I was on a plane. Cabin pressure affects respiration. The system caught something I never would have noticed scrolling through charts.

This is the difference between dashboards and intelligence. Dashboards show you data. Intelligence surfaces what matters.
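One common way to surface this kind of outlier is a robust z-score against a trailing baseline. The sketch below assumes that approach, with a 14-night window and a 3.5 threshold. The post doesn't spell out the detector VitalArc actually uses, and the respiratory rates are synthetic.

```python
from statistics import median


def flag_anomalies(values, window=14, threshold=3.5):
    """Flag points far from the trailing median, using a robust z-score.

    Median absolute deviation (MAD) is used for scale so that one odd
    night does not inflate the baseline the way a standard deviation would.
    """
    flagged = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        med = median(baseline)
        mad = median(abs(v - med) for v in baseline)
        if mad == 0:
            continue  # flat baseline: no scale to compare against
        robust_z = 0.6745 * (values[i] - med) / mad
        if abs(robust_z) > threshold:
            flagged.append((i, round(robust_z, 1)))
    return flagged


# Synthetic nightly respiratory rates (breaths/min) with one sharp drop.
resp = [15.0, 15.2, 14.9, 15.1, 15.3, 14.8, 15.0, 15.2, 15.1, 14.9,
        15.0, 15.3, 14.8, 15.1, 12.0, 15.0, 15.2]

anomalies = flag_anomalies(resp)
```

On this series only the drop at index 14 is flagged, and the nights after it are not, because the median-based baseline barely moves when a single outlier enters the window.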

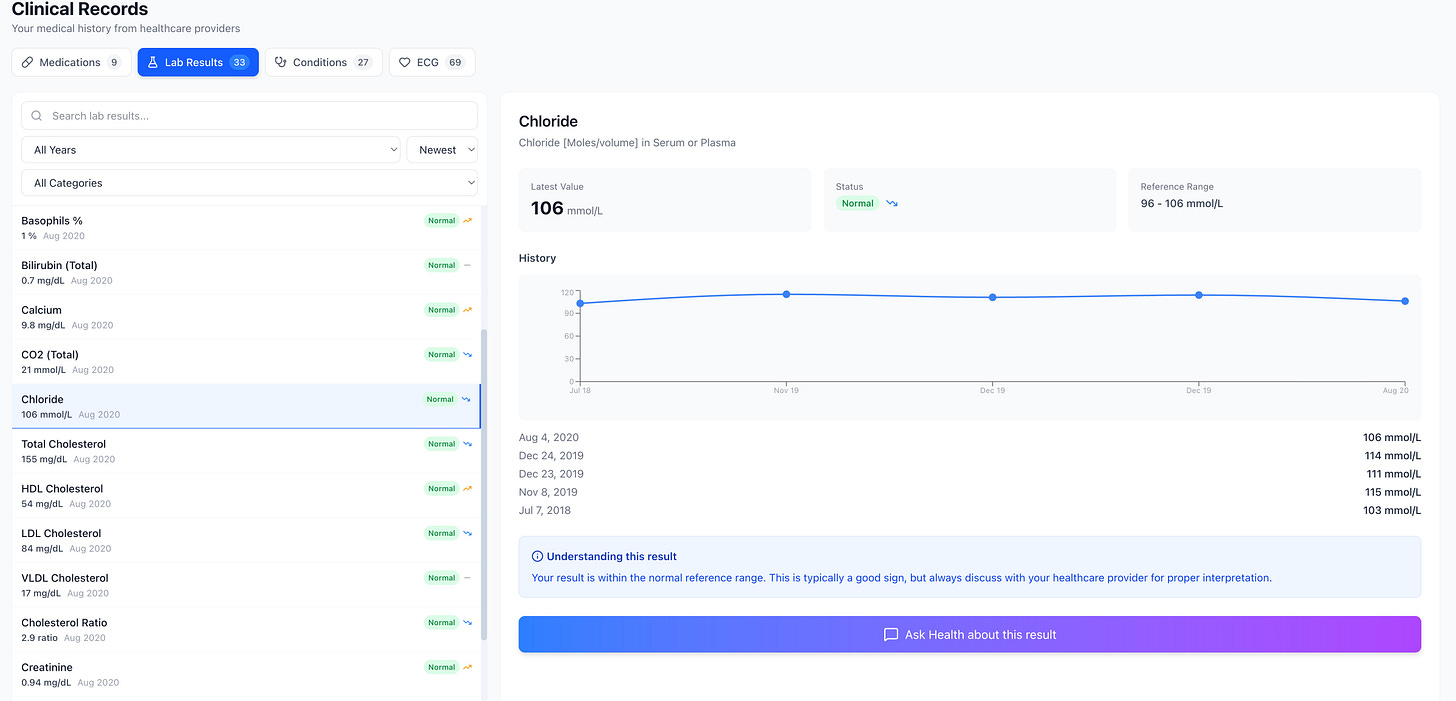

Discovery 2: Longitudinal Lab Values

You get albumin tested. Your doctor says “normal.” You move on.

But what’s your trend? Are you at the high end of normal and declining? Low end and stable? Nobody tells you this.

VitalArc shows me my lab values over time. I can see trajectories that a single snapshot misses. A “normal” value that’s been steadily declining for three years tells a different story than a stable one.
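The "normal but declining" idea comes down to fitting a line through the results and reading its slope alongside the reference range. A minimal sketch, with synthetic albumin values and an illustrative 3.5-5.0 g/dL range (both assumptions, not my actual labs):

```python
from datetime import date


def yearly_slope(dates, values):
    """Ordinary least-squares slope of values over time, in units per year."""
    years = [(d - dates[0]).days / 365.25 for d in dates]
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    return sxy / sxx


def describe(value, slope, low, high):
    """Combine the 'normal' stamp with the direction the value is moving."""
    in_range = low <= value <= high
    direction = "declining" if slope < 0 else "rising" if slope > 0 else "flat"
    return f"{'normal' if in_range else 'out of range'}, {direction}"


# Synthetic albumin results (g/dL); the reference range is illustrative only.
dates = [date(2021, 3, 1), date(2022, 3, 1), date(2023, 3, 1), date(2024, 3, 1)]
albumin = [4.8, 4.6, 4.3, 4.1]

slope = yearly_slope(dates, albumin)
summary = describe(albumin[-1], slope, low=3.5, high=5.0)
print(f"{slope:+.2f} g/dL per year: {summary}")
```

Every one of those four results would be stamped "normal" on its own. The slope is the part a single snapshot can't show.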

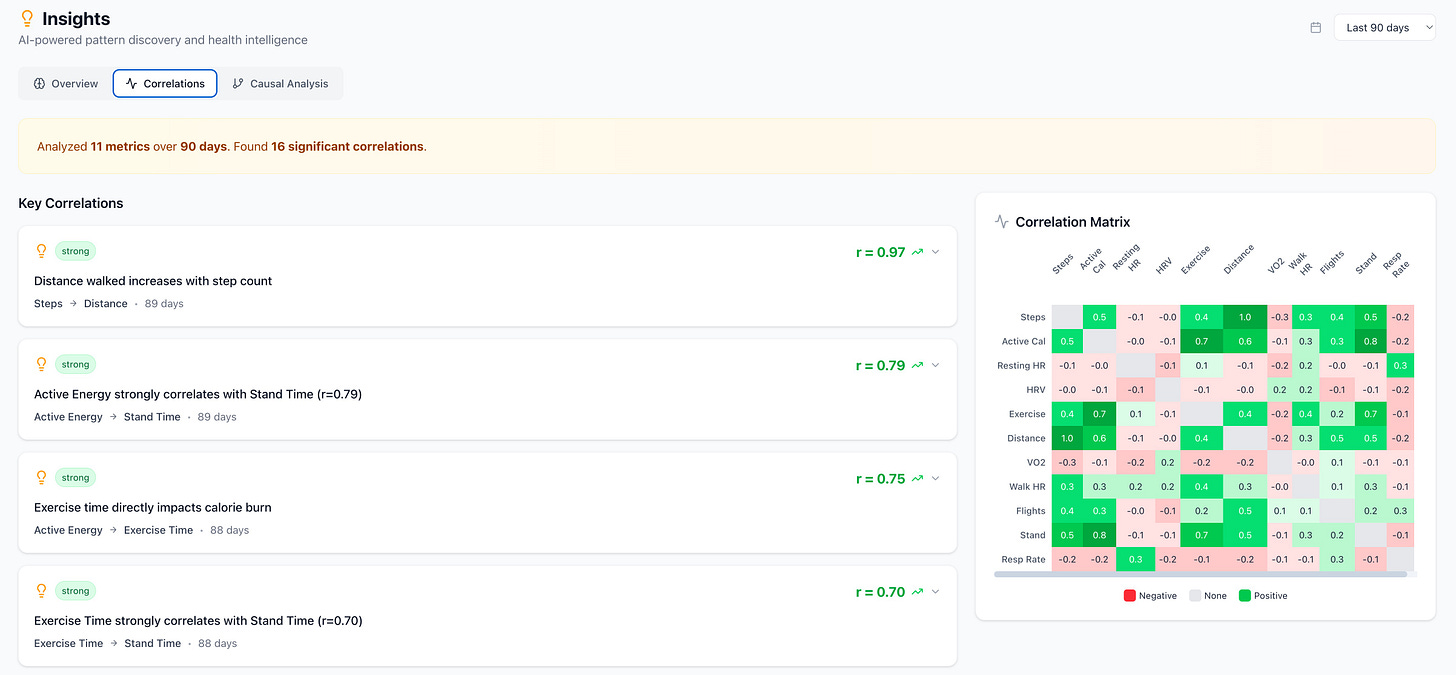

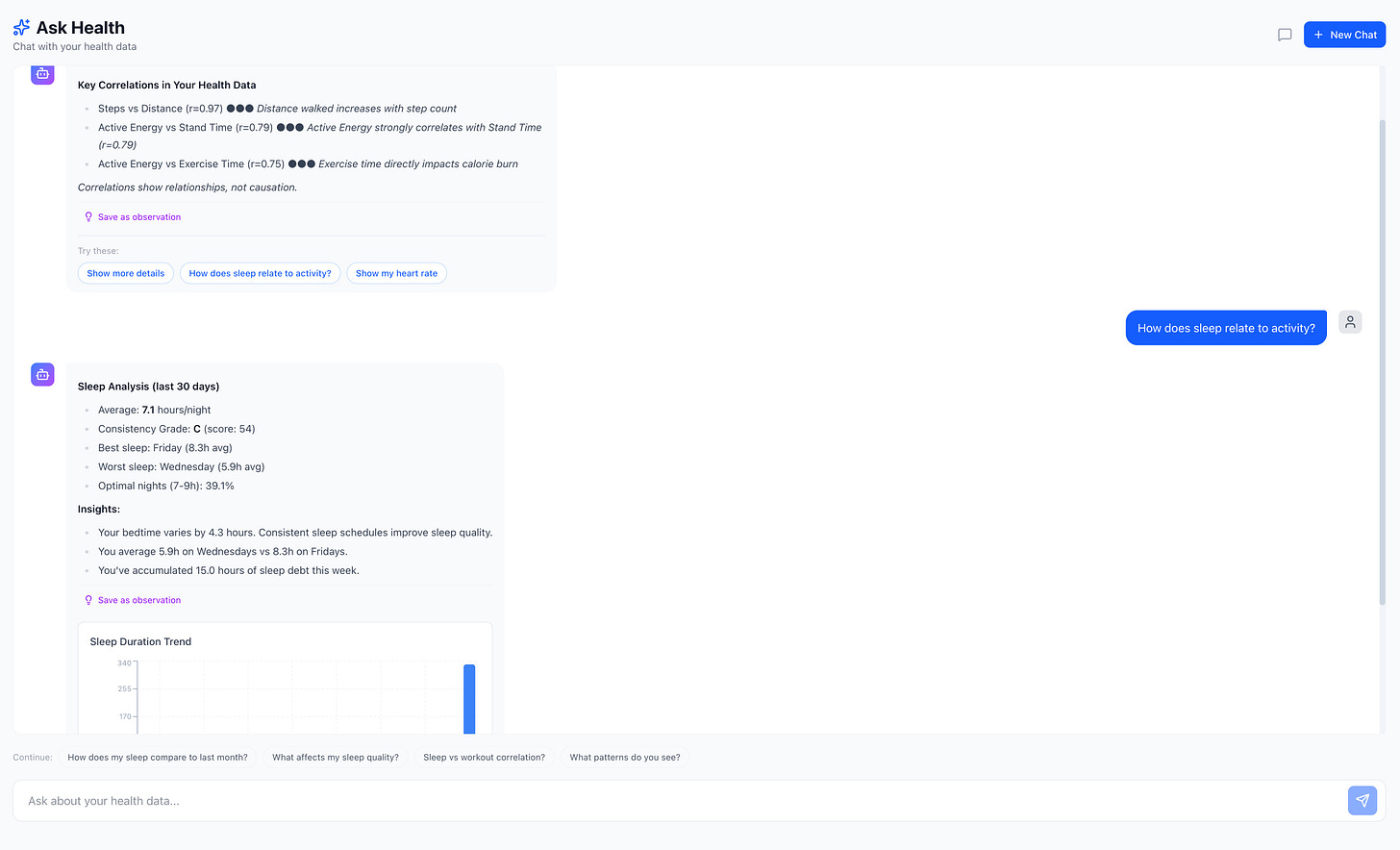

Discovery 3: The Sleep-Heart Connection

Quantified my personal pattern: nights under 7 hours consistently correlate with elevated next-day resting heart rate and lower HRV. Not a surprise conceptually. But seeing the specific correlation coefficient for my body? r = 0.78 between sleep duration and next-day HRV. That’s powerful.

The correlation matrix analyzed 11 metrics over 90 days and found 18 significant correlations. Some expected (steps correlate with distance). Some not (respiratory rate inversely correlates with VO2 max estimate).
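The sleep-to-next-day-HRV number is a lagged Pearson correlation: night i's sleep is paired with day i+1's HRV. A minimal sketch, using synthetic values rather than my own data:

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


def next_day_correlation(sleep_hours, daily_hrv):
    """Correlate night i's sleep with day i+1's HRV (a one-day lag)."""
    return pearson_r(sleep_hours[:-1], daily_hrv[1:])


# Synthetic data: each day's HRV loosely follows the previous night's sleep.
sleep_hours = [6.0, 7.5, 8.0, 5.5, 7.0, 6.5, 8.5, 7.0]
daily_hrv = [41.0, 35.0, 44.0, 47.0, 33.0, 42.0, 38.0, 49.0]

r = next_day_correlation(sleep_hours, daily_hrv)
```

One design note: 11 metrics give 55 distinct pairs, so some correction for multiple comparisons is worth applying before calling any single correlation significant.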

Discovery 4: Pharmacogenomics That Actually Mattered

Here’s where genomics changed everything.

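I’m a CYP2D6 intermediate metabolizer. Codeine is a prodrug that the CYP2D6 enzyme has to convert to morphine, and my body does that conversion at a reduced rate, so it does little for me. I’ve been prescribed it multiple times over the years. Never worked. Now I know why.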

This isn’t academic. This is “why did that medication never work” and “why did I have those side effects” answered by data.

The system flagged a drug interaction my doctors never mentioned. I had the side effects for years without knowing why.

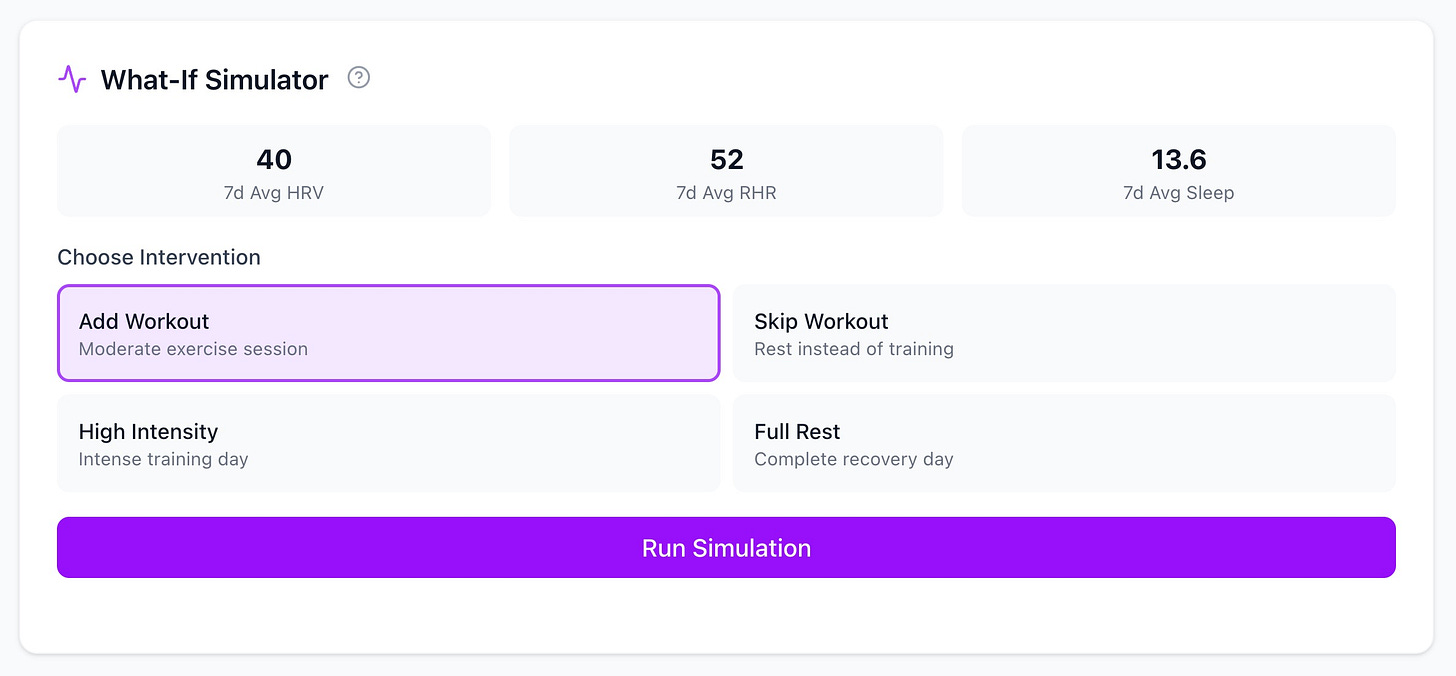

Discovery 5: What-If Simulations

The What-If Simulator lets me model interventions. Add a workout, skip a workout, full rest. See projected impact on HRV, resting heart rate, sleep. It’s a digital twin for health decisions.

Current 7-day figures: HRV 40 ms and resting HR 52 bpm (averages), sleep 13.6 hours (that’s a total over 7 days, not per night). Choose an intervention. Run the simulation. See the projected outcome with confidence intervals.

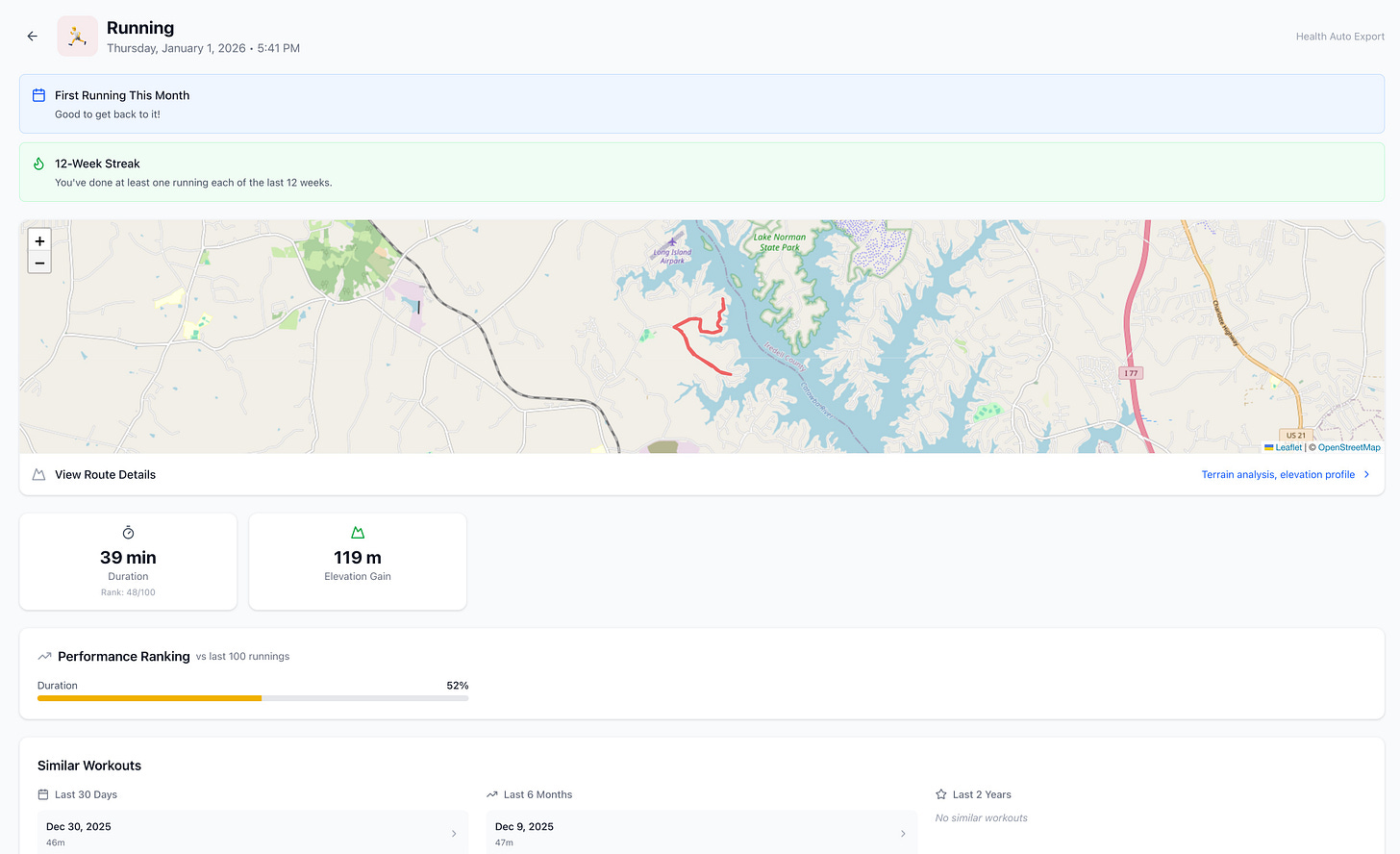

Discovery 6: The Workout Intelligence

3,854 workouts tracked. GPS routes mapped. But more interesting: “Similar Workouts” comparison. How does today’s run compare to the last 100? Performance ranking over time. Progression you can’t see in any fitness app.

A 39-minute run with 119m elevation gain. Ranked 48th percentile on duration compared to my last 100 runs. I can see instantly whether I’m improving, maintaining, or declining.
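A percentile ranking like that one is simple to compute. The sketch below uses one common definition (share of past values at or below the new one) with synthetic run durations. The exact definition VitalArc uses may differ.

```python
def percentile_rank(value, history):
    """Percent of past values at or below `value` (0-100)."""
    if not history:
        raise ValueError("history must not be empty")
    at_or_below = sum(1 for h in history if h <= value)
    return 100.0 * at_or_below / len(history)


# Synthetic durations (minutes) for the last 100 runs: 20.0, 20.4, ..., 59.6.
last_100 = [20.0 + 0.4 * i for i in range(100)]

rank = percentile_rank(39.0, last_100)
```

The same function works for pace, elevation gain, or average heart rate. Only the history list changes.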

The Conversation Layer

The chat interface is where everything comes together.

“What affects my heart rate?” “How has my sleep changed over the past year?” “What’s unusual in my recent data?”

Natural language queries that route to the right backend:

Factual lookups → SQL agent

Trend analysis → Aggregation service

Pattern discovery → ML pipeline

Clinical explanation → RAG engine
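The routing idea can be sketched with plain keyword rules. This is a deliberately simple stand-in: the `Backend` names mirror the four routes above, but the keyword lists and the `route` function are hypothetical, and a production router could just as well ask an LLM to classify the question.

```python
from enum import Enum


class Backend(Enum):
    SQL_AGENT = "sql_agent"        # factual lookups
    AGGREGATION = "aggregation"    # trend analysis
    ML_PIPELINE = "ml_pipeline"    # pattern discovery
    RAG_ENGINE = "rag_engine"      # clinical explanation


# Hypothetical keyword rules, checked in order; the first match wins.
RULES = [
    (("trend", "changed", "over the past", "since"), Backend.AGGREGATION),
    (("affect", "unusual", "pattern", "correlat"), Backend.ML_PIPELINE),
    (("what does", "explain", "why"), Backend.RAG_ENGINE),
]


def route(question: str) -> Backend:
    """Pick a backend from keywords; fall back to the SQL agent for plain lookups."""
    text = question.lower()
    for keywords, backend in RULES:
        if any(keyword in text for keyword in keywords):
            return backend
    return Backend.SQL_AGENT


for q in ("What affects my heart rate?",
          "How has my sleep changed over the past year?",
          "What was my resting heart rate yesterday?"):
    print(q, "->", route(q).value)
```

Falling back to the SQL agent keeps unmatched questions on the cheapest, most literal path, which seems like the safer default for health data.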

The interface suggests queries organized by category: Trends, Workouts, Sleep, Clinical, Insights. Pre-built questions like “Resting HR trend,” “Sleep consistency,” “Lab summary,” “What affects HR?”

The beautiful part: it can run locally (Ollama) or connect to external models if you’re comfortable with that. I am. The choice is yours.

This is where ChatGPT Health and VitalArc converge. Natural language over health data. The interface that makes 6M records accessible.

The Future of Personal Health Intelligence

This is what excites me most.

The Informed Patient

I don’t want AI to replace my doctor. I want AI to help me be a better patient.

The best medical consultations happen when you arrive prepared. “Here’s what I’ve noticed over seven years of data. Here are my trends. Here’s what the genomics say. What do you think?”

That conversation is more productive than “any concerns?” followed by fifteen minutes of catching up.

The Democratization Moment

ChatGPT Health just brought this to 230 million people. That’s incredible. Most of them will get surface-level insights. Some will go deeper. A few will build their own systems.

I’m one of those few. And I’m willing to share what I built.

VitalArc isn’t an off-the-shelf app. You’d need to export your Apple Health data, set up the stack, import your records. It requires work. But if you’re willing to do that work, the capability is there.

What Becomes Possible

When genomics meets clinical data meets wearable data meets foundation models:

Drug interactions flagged before prescriptions

Disease risk trajectories based on your actual patterns

Personalized interventions based on what works for your body

7-day forecasts for HRV, resting heart rate, sleep

Causal analysis: not just “what” but “why”

The n-of-1 Study

Clinical trials need thousands of participants. They find average effects. But you’re not average. You’re you.

With enough longitudinal data, you become your own clinical trial. What interventions work for your physiology? What patterns predict your outcomes? The answers are in your data.

The Builder’s Moment

Here’s what changed: Claude Code. I built this in days. Not months. The barrier to building sophisticated health AI collapsed. If you can describe what you want, you can build it.

Well, mostly. The dashboard built itself. The foundation model required real expertise and multiple pivots. AI is an incredible accelerant, but it’s not magic. Domain knowledge still matters.

ChatGPT Health is the consumer product. VitalArc is what’s possible when you go further. Both are valid. Both are exciting. The future is personal health intelligence for everyone, at whatever depth they want.

Closing

OpenAI made health AI mainstream this week. 230 million people asking health questions got a better tool. That’s worth celebrating.

I’ve been building toward the same destination from a different direction. Deeper integration. Personal foundation models. Genomics layered on clinical data layered on wearables.

The discoveries I’ve made about my own health over the past months would have been impossible before. The airplane anomaly. The longitudinal lab trends. The pharmacogenomics explaining years of medication puzzles. Nine failed classification attempts leading to a breakthrough that solved the right problem.

This is the future: AI that helps you understand your own body. Whether that’s ChatGPT Health for most people, or building your own for those who want to go further.

The door is open. The tools exist. The data is yours.

What will you learn?

If you’re interested in VitalArc or want to discuss personal health AI, reach out. The code isn’t polished for public release yet, but I’m happy to share with anyone willing to put in the work to get it running.
