The Darwin Gödel Machine

AI Systems Are Learning to Rewrite Themselves

"Your AI development team just became obsolete. Not in 10 years—today."

That was my first reaction when I encountered the Darwin Gödel Machine paper from researchers at leading universities. The headline results seemed revolutionary: AI agents that rewrite their own code, achieving 150% performance improvements on software engineering benchmarks. Self-improving AI had finally arrived.

But after diving deeper into the research, a more nuanced picture emerges. Yes, this is a genuine breakthrough that deserves attention. But it is also a reminder that, in our excitement about AI advances, we need to separate signal from noise: to understand what papers like this actually demonstrate, as opposed to what the hype suggests.

The Darwin Gödel Machine represents real progress in self-improving AI—progress that signals gradual but profound changes ahead for how we develop and deploy AI systems. Let's examine what this breakthrough actually means.

What the Paper Actually Demonstrates

The research team, Jenny Zhang and colleagues, achieved something remarkable with relatively modest resources. Their Darwin Gödel Machine (DGM) raised its score on SWE-bench, a benchmark of real GitHub issues, from 20.0% to 50.0% of tasks resolved, a 150% relative improvement, and demonstrated 37% performance gains when transferring optimizations from Python to Rust and C++.
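For readers checking the arithmetic, the 150% figure is the gain measured relative to the starting score, not an absolute number of percentage points:

$$\text{relative improvement} = \frac{50.0\% - 20.0\%}{20.0\%} = \frac{30.0}{20.0} = 1.5 = 150\%$$

In absolute terms the gain is 30 percentage points; equivalently, the final agent resolves 2.5 times the share of tasks the initial agent did.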

Here's what makes this significant: the system doesn't just get better at specific tasks. It develops meta-skills that transfer across different programming languages and problem domains. The AI learned to:

Create better code editing tools

Manage long-context windows more effectively

Implement peer-review mechanisms

These capabilities emerged from the evolutionary process rather than being explicitly programmed.

The methodology combines evolutionary algorithms with empirical validation. Instead of trying to formally prove that each code modification is beneficial (the requirement in Jürgen Schmidhuber's original Gödel machine, and one that is practically impossible to meet), the system generates multiple agent variants, tests them on real coding benchmarks, and maintains an archive of high-performing agents. This approach sidesteps the theoretical limitations that have kept self-improving AI largely hypothetical for decades.
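To make the loop concrete, here is a minimal Python sketch of an archive-based evolutionary search. It is a toy under stated assumptions, not the DGM's implementation: an "agent" is reduced to a dictionary of hypothetical numeric skills, `self_modify` randomly perturbs one skill where the real system has a foundation model rewrite the agent's code, and `evaluate` scores agents on an invented 20-task benchmark standing in for SWE-bench. Selecting parents with probability proportional to score is one simple choice among several.

```python
import random
from dataclasses import dataclass


@dataclass
class Agent:
    # Hypothetical stand-in for an agent's source code: named numeric "skills".
    skills: dict
    score: float = 0.0


def evaluate(agent: Agent) -> float:
    # Toy benchmark standing in for SWE-bench: 20 tasks with difficulty
    # 0.0, 0.5, ..., 9.5; a task is solved if total skill exceeds its difficulty.
    total = sum(agent.skills.values())
    difficulties = [i * 0.5 for i in range(20)]
    return sum(total > d for d in difficulties) / len(difficulties)


def self_modify(parent: Agent, rng: random.Random) -> Agent:
    # Stand-in for a foundation model rewriting the agent's own code:
    # nudge one randomly chosen skill up or down (the edit may help or hurt).
    skills = dict(parent.skills)
    key = rng.choice(sorted(skills))
    skills[key] = max(0.0, skills[key] + rng.gauss(0.3, 0.5))
    return Agent(skills)


def evolve(generations: int = 50, seed: int = 0) -> list:
    rng = random.Random(seed)
    root = Agent({"editing": 1.0, "context": 1.0, "review": 1.0})
    root.score = evaluate(root)
    archive = [root]
    for _ in range(generations):
        # Pick a parent with probability proportional to its score; the small
        # constant keeps low scorers selectable so exploration continues.
        weights = [a.score + 0.05 for a in archive]
        parent = rng.choices(archive, weights=weights, k=1)[0]
        child = self_modify(parent, rng)
        child.score = evaluate(child)  # empirical validation, not a proof
        # Discard broken variants: in this toy, an agent with no editing
        # skill can no longer modify itself, so it is not kept as a parent.
        if child.skills["editing"] > 0:
            archive.append(child)
    return archive


archive = evolve()
best = max(archive, key=lambda a: a.score)
print(f"archive size: {len(archive)}")
print(f"root score: {archive[0].score:.2f}, best score: {best.score:.2f}")
```

Keeping a growing archive, rather than only the current best agent, means a variant that scores modestly can still be chosen as a parent later and act as a stepping stone toward a stronger one.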

But let's be clear about the scope: this is self-improvement in Python code modification, not general AI enhancement. The agents can rewrite their own code to become better at coding tasks, but they're not rewriting their underlying neural networks or achieving general intelligence improvements.

What's remarkable is that university researchers achieved these results with what amounts to a modest research budget—around 3,000 GPU-hours per evolutionary run.

This demonstrates how foundation models have democratized AI research, allowing small teams to compete with tech giants in advancing the state of the art.

The Gradual Shift in AI Development

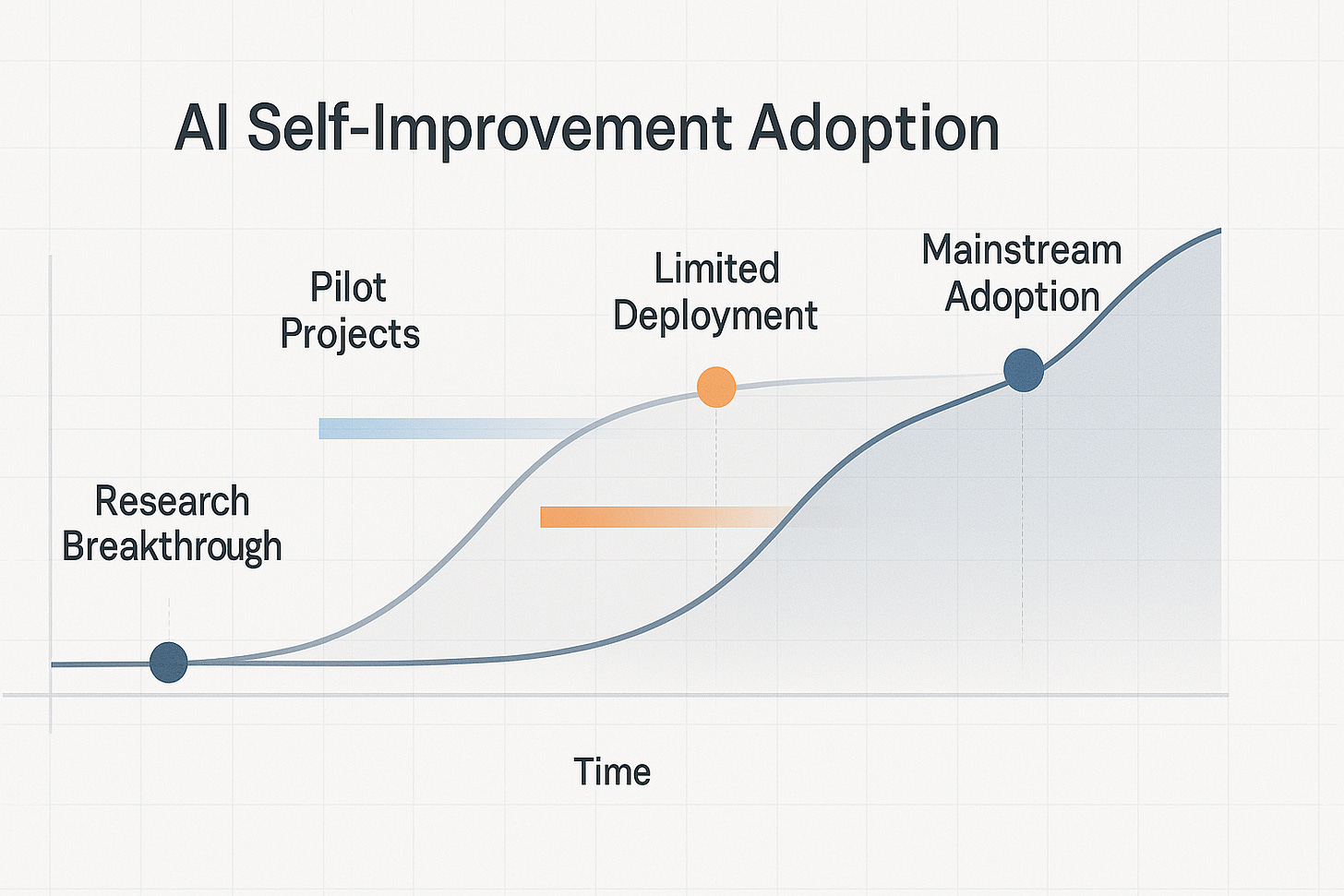

Rather than a sudden transformation, we're witnessing the early stages of a gradual shift in how AI systems evolve. Self-improvement in narrow domains is becoming practical, and the compound effects of small improvements building over time may prove more significant than dramatic leaps toward artificial general intelligence.

Consider how this differs from traditional AI development cycles. Currently, improving an AI system requires human engineers to identify bottlenecks, design solutions, implement changes, and validate improvements—a process that typically takes months. The DGM compressed this cycle into hours or days, with the AI system handling the entire improvement loop autonomously.

This acceleration doesn't mean human developers become irrelevant overnight. Instead, it suggests a future where AI systems handle routine optimization and enhancement tasks, freeing human engineers to focus on higher-level architectural decisions and novel problem domains.

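The compound effect is crucial here. A system that improves itself by 10% per week doesn't simply add 10% each week: each week's gain applies to an already-improved system, so the gains compound. More importantly, it gets better at getting better.

Because some of its improvements target its own improvement mechanisms, the rate of progress itself can rise, so the curve steepens over time instead of climbing in a straight line.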
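The difference between adding, compounding, and compounding at a rising rate is easy to see numerically. The sketch below uses the hypothetical 10% weekly gain from the paragraph above; the 5% weekly growth in the gain itself, standing in for improvements to the improvement mechanism, is an arbitrary assumption for illustration, not a figure from the DGM paper.

```python
def linear(weeks: int, gain: float = 0.10) -> float:
    # Additive: each week adds 10% of the *original* capability.
    return 1.0 + gain * weeks


def compound(weeks: int, gain: float = 0.10) -> float:
    # Multiplicative: each week adds 10% of the *current* capability.
    return (1.0 + gain) ** weeks


def accelerating(weeks: int, gain: float = 0.10, gain_growth: float = 0.05) -> float:
    # Hypothetical "better at getting better": the weekly gain itself
    # grows by 5% (relative) every week.
    capability = 1.0
    for _ in range(weeks):
        capability *= 1.0 + gain
        gain *= 1.0 + gain_growth
    return capability


for w in (4, 12, 26):
    print(f"week {w:2d}: linear {linear(w):5.2f}  "
          f"compound {compound(w):6.2f}  accelerating {accelerating(w):8.2f}")
```

Compounding at a fixed 10% is already exponential; it is the rising rate in `accelerating` that captures the "better at getting better" effect. These are toy curves, not forecasts.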

We're already seeing early versions of this in coding assistance tools. GitHub Copilot and similar systems continuously improve through usage data and model updates. The DGM represents the next evolution: AI that actively rewrites its own capabilities rather than passively learning from human feedback.

What This Means for Software Development

Software development emerges as the natural proving ground for self-improving AI, and for good reason. Code is inherently modular, testable, and measurable. You can objectively evaluate whether a code modification improves performance, reduces bugs, or enhances maintainability.

This creates a perfect environment for AI self-improvement. The system can generate modifications, test them automatically, and measure results against clear benchmarks. Unlike many AI applications where success is subjective or difficult to quantify, software development provides immediate, objective feedback.
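The kind of objective feedback described above can be illustrated with a small Python sketch: a proposed code modification is scored by the fraction of a test suite it passes and accepted only if that score rises. The median function, the test cases, and the acceptance rule are hypothetical examples chosen for clarity; systems like the DGM score agents on full benchmarks such as SWE-bench rather than on a handful of unit tests.

```python
from typing import Callable

# Hypothetical test suite: (input list, expected median).
TESTS = [([3, 1, 2], 2), ([4, 1, 3, 2], 2.5), ([5], 5), ([2, 2, 8, 8], 5.0)]


def original_median(xs):
    # Buggy baseline: mishandles even-length input.
    s = sorted(xs)
    return s[len(s) // 2]


def modified_median(xs):
    # Candidate modification: average the two middle values for even lengths.
    s = sorted(xs)
    mid = len(s) // 2
    return s[mid] if len(s) % 2 else (s[mid - 1] + s[mid]) / 2


def pass_rate(fn: Callable, tests=TESTS) -> float:
    passed = 0
    for args, expected in tests:
        try:
            if fn(args) == expected:
                passed += 1
        except Exception:
            pass  # a crash counts as a failure
    return passed / len(tests)


baseline, candidate = pass_rate(original_median), pass_rate(modified_median)
accept = candidate > baseline
print(f"baseline {baseline:.2f} -> candidate {candidate:.2f}, accept={accept}")
# prints: baseline 0.50 -> candidate 1.00, accept=True
```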

The practical applications are already becoming clear:

Automated code review systems that continuously improve their ability to catch bugs and suggest optimizations

Development environments that learn from each project to become more effective at the next one

AI pair programmers that don't just assist with current tasks but actively enhance their own capabilities based on what they learn from each coding session

Within the next 2-3 years, we'll likely see these capabilities integrated into mainstream development tools. The transition will be gradual—starting with automated optimization of specific code patterns, expanding to broader architectural improvements, and eventually encompassing entire development workflows.

For software companies, this represents both an opportunity and a competitive necessity. Organizations that effectively leverage self-improving AI for development will gain significant productivity advantages. Those that don't may find themselves unable to keep pace with the accelerated development cycles their competitors achieve.

The key insight is that software development itself becomes a domain where AI can bootstrap its own capabilities.

Each improvement to the AI's coding abilities makes it better at improving its own code, creating a virtuous cycle of enhancement.

The Innovation Paradox

One of the most striking aspects of this research is its origin. While tech giants spend billions on AI development, this breakthrough came from university researchers working with relatively modest resources. This highlights a fundamental shift in how AI innovation happens.

Foundation models have democratized AI research in unprecedented ways. A small team with access to models like Claude 3.5 Sonnet can now achieve results that would have required massive corporate research labs just a few years ago. The DGM researchers didn't need to train their own foundation models—they built their self-improving system on top of existing capabilities.

This democratization has profound implications for competitive dynamics. Innovation can emerge from unexpected sources: academic labs, startup teams, even individual researchers. The traditional advantages of scale and resources matter less when the core AI capabilities are available as APIs.

For businesses, this means staying alert to breakthroughs from non-traditional sources. The next major AI advancement might not come from OpenAI or Google—it could emerge from a university lab or a small startup building on foundation model capabilities.

The DGM paper exemplifies this trend. The researchers focused on a specific problem—self-improving AI for coding—and solved it elegantly using existing foundation models as building blocks. They didn't need to reinvent the wheel; they just needed to combine existing components in novel ways.

This pattern will likely accelerate. As foundation models become more capable and accessible, we'll see more specialized innovations that leverage these capabilities for specific domains and use cases.

Measured Strategic Response

So how should organizations respond to genuine breakthroughs like the Darwin Gödel Machine? The key is building capabilities without overcommitting to unproven approaches—strategic patience combined with tactical preparation.

Start with pilot projects that explore self-improving AI in controlled environments. For software companies, this might mean experimenting with automated code optimization tools or AI-assisted development workflows. The goal isn't to revolutionize everything immediately, but to build understanding and capabilities that can scale as the technology matures.

The importance of measured experimentation cannot be overstated. The DGM shows that self-improving AI is possible, but translating research breakthroughs into production systems requires careful engineering and extensive testing. Organizations need to balance innovation with operational stability.

Consider establishing dedicated teams to monitor AI research and identify practical applications. The pace of AI advancement means that today's academic papers become tomorrow's commercial products. Companies that systematically track and evaluate research developments will be better positioned to adopt new capabilities as they mature.

Investment in AI literacy across the organization becomes crucial. As AI systems become more capable of self-improvement, the ability to effectively direct and constrain these systems becomes a core competency. This requires understanding not just what AI can do, but how to ensure it does what you actually want.
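One concrete form that "constraining" can take is an acceptance gate between a self-modified variant and anything that depends on it. The sketch below is hypothetical: the `Candidate` fields, the protected file names, and the thresholds are illustrative assumptions, not part of the DGM or any product. It rejects variants that edit the code used to evaluate them, that break existing tests, or that fail to beat the current baseline, and routes the rest to human review rather than deploying them automatically.

```python
from dataclasses import dataclass, field

# Hypothetical files a self-modifying agent must never edit.
PROTECTED_FILES = {"evaluation.py", "safety_checks.py"}


@dataclass
class Candidate:
    name: str
    benchmark_score: float       # score on the target benchmark, 0.0 to 1.0
    regression_pass_rate: float  # fraction of pre-existing tests still passing
    files_touched: set = field(default_factory=set)


def review_gate(c: Candidate, baseline_score: float, min_gain: float = 0.01):
    """Return (approved, reason) for a self-modified candidate."""
    if c.files_touched & PROTECTED_FILES:
        return False, "modifies protected evaluation or safety code"
    if c.regression_pass_rate < 1.0:
        return False, "breaks existing tests"
    if c.benchmark_score < baseline_score + min_gain:
        return False, "no meaningful improvement over baseline"
    return True, "passes automated checks; send to human review"


baseline = 0.50
candidates = [
    Candidate("v1", 0.55, 1.0, {"tools.py"}),
    Candidate("v2", 0.70, 1.0, {"evaluation.py"}),  # high score, but edits its grader
    Candidate("v3", 0.60, 0.95, {"tools.py"}),
]
results = {c.name: review_gate(c, baseline) for c in candidates}
for name, (ok, reason) in results.items():
    print(f"{name}: {'approve' if ok else 'reject'} ({reason})")
```

Protecting the evaluation code matters because a system optimizing for a score could otherwise raise the score without improving the behavior the score is meant to measure.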

The timeline for adoption will vary by domain and use case. Software development, with its objective metrics and testable outcomes, will likely see faster adoption of self-improving AI. Other domains may require more time to develop appropriate evaluation frameworks and safety measures.

Looking Forward: Gradual Transformation

The Darwin Gödel Machine demonstrates that self-improving AI is transitioning from theoretical possibility to practical reality. But the transformation will be gradual, not sudden.

We're witnessing the early stages of AI that improves itself, and that's worth taking seriously without losing our analytical rigor.

The research shows us a path forward: evolutionary algorithms combined with empirical validation can enable meaningful AI self-improvement in specific domains. As these techniques mature and expand to other areas, we'll see AI systems that continuously enhance their own capabilities.

This doesn't mean human expertise becomes irrelevant. Instead, it suggests a future where humans and AI systems collaborate more effectively, with AI handling routine optimization and enhancement while humans focus on strategic direction and novel problem-solving.

The key is maintaining perspective. Papers like the DGM signal real change, but the timeline for widespread adoption is longer than headlines suggest. Success comes from thoughtful analysis and measured response, not reactive hype.

We're entering an era where AI systems can meaningfully improve themselves. That's a profound development that deserves serious attention. But it's also a gradual process that will unfold over years, not months. The organizations that recognize this balance—taking the breakthrough seriously while maintaining analytical discipline—will be best positioned for the changes ahead.

The Darwin Gödel Machine isn't just a research curiosity. It's a glimpse of how AI development will evolve: from human-designed systems to AI that actively enhances its own capabilities. That future is closer than many realize, but it will arrive through careful engineering and measured progress, not sudden transformation.

Pay attention to papers like this. They're showing us the future, one measured breakthrough at a time.