NVIDIA CES 2025: Through the Lens of AI Research Tools (Claude, ChatGPT, Gemini, DeepSeek)

From Simple Summaries to Deep Technical Analysis

TLDR: Jensen's Big Five

Next-Gen Gaming: RTX 50 Series GPUs featuring Blackwell architecture, with the 5090 boasting 92B transistors

Physical AI: Evolution beyond perception and generative AI to systems that can reason and act

Desktop Supercomputing: Project DIGITS bringing a $3K personal AI supercomputer to the desktop

Robotics Revolution: Cosmos platform for photorealistic robot training

Automotive Future: Major partnerships with Toyota and Aurora for autonomous vehicles

Here is the summary from Google Gemini Deep Research: https://g.co/gemini/share/64335ee30e9e

Part 1: Breaking Down the Keynote

Jensen Huang took us on a journey through NVIDIA's vision of an AI-powered future, and what a ride it was! Let's walk through the key moments chronologically:

The Evolution of AI

Huang started by mapping AI's trajectory - from the early days of perception AI (understanding images and sounds) to today's generative AI boom. But the real bombshell? We're entering the era of "physical AI" - systems that don't just perceive or generate but can reason, plan, and act in the real world.

Gaming Meets AI

The RTX 50 Series reveal was pure NVIDIA style. The flagship 5090 isn't just a GPU; it's a beast with 92 billion transistors pumping out 3,352 trillion AI operations per second. Add FP4 computing support, and you've got AI models running locally with a smaller footprint than ever before.

Democratizing AI

Project DIGITS might be the sleeper hit of the keynote. A $3,000 desktop AI supercomputer? That's democratization of AI in hardware form. It's designed to run models up to 200B parameters - bringing research-grade capabilities to developers' desks.
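To see why FP4 matters for a claim like "200B parameters on a desktop," here's some quick back-of-the-envelope math. It counts model weights only (activations, KV cache, and runtime overhead are ignored), and `weights_gb` is just a helper name I made up for illustration, not anything from NVIDIA:

```python
def weights_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


PARAMS = 200e9  # the 200B-parameter ceiling quoted for Project DIGITS

# Compare common precisions; fewer bits per weight means a smaller footprint.
for name, bits in [("FP16", 16), ("FP8", 8), ("FP4", 4)]:
    print(f"{name}: {weights_gb(PARAMS, bits):.0f} GB")
```

At FP4, the weights of a 200B-parameter model come to roughly 100 GB, versus roughly 400 GB at FP16. That 4x difference is what makes the "smaller footprint" pitch plausible for desktop hardware.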

Robotics Renaissance

The Cosmos platform announcement showed NVIDIA's hand in robotics. Think of it as a ChatGPT moment, but for robots. Cost-efficient, photorealistic simulations mean we can train robots without the expensive real-world data collection dance.

Part 2: The AI Research Showdown

Let's get honest about AI research capabilities! Having spent countless hours working with various AI models, I was fascinated to see how each approached the same challenge: analyzing Jensen's CES keynote. The differences weren't just interesting—they were revealing.

The Contestants:

Google Gemini 1.5 Pro: The research powerhouse

Claude 3.5 Sonnet: The articulate analyst

ChatGPT 4.0: The quick summarizer

DeepSeek: The technical specialist

First Impressions Matter

When asked about the CES keynote, the responses were telling:

ChatGPT offered a quick, surface-level summary

Claude politely acknowledged its knowledge cutoff

DeepSeek pulled some technical specs but missed context

Gemini... well, Gemini went full researcher mode!

Deep Dive Comparison

Core Capabilities

After testing them all on the NVIDIA keynote (and trust me, I've spent way too many hours playing with these!), I noticed each has its sweet spot:

Practical Applications

But here's the real deal: Every powerful tool has its limits. Understanding these helps us use them more effectively!

Limitations

The Real Game-Changer

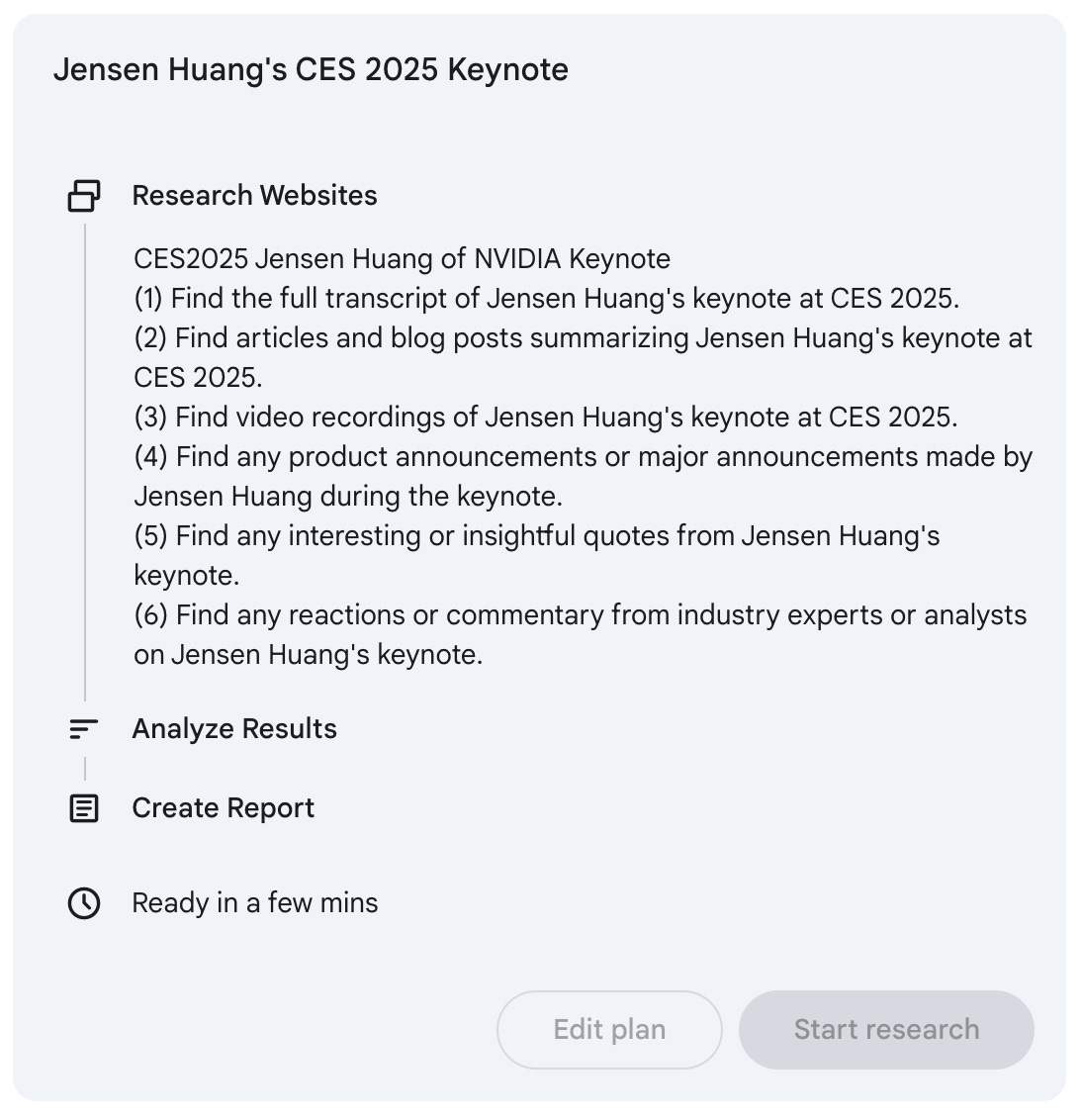

What truly sets Gemini's research capabilities apart isn't just the access to information—it's the methodology. While others gave us the "what," Gemini showed us the "how" and "why." Its approach mimics what I do when researching a complex technical topic:

Planning Phase: Instead of jumping straight to answers, it laid out a research strategy

Source Diversity: Not just official press releases, but technical blogs, video transcripts, and expert analyses

Validation: Cross-referencing facts across multiple sources

Synthesis: Turning raw data into a coherent narrative

Real-World Impact

This represents a significant shift for those of us in technical content creation. Imagine cutting your research time in half while doubling your source quality. That's not just an incremental efficiency gain; it's a transformation in how we can approach technical writing and analysis.

Part 3: Google's Deep Research Magic 🎩

Here's where things get interesting. Google's approach to research isn't just about collecting links - it's about mimicking human research methodology.

The magic lies in the structured approach:

Initial Planning: Setting clear research goals and parameters

Multi-Source Research: Gathering data from diverse, credible sources

Validation & Analysis: Cross-referencing and pattern identification

Synthesis: Creating a coherent narrative from complex data

What sets Google's deep research apart is its ability to:

Execute a systematic research plan

Validate information across multiple sources

Synthesize complex technical information into clear narratives

Provide comprehensive citations and source tracking
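To make the "validate across multiple sources" idea concrete, here's a toy sketch. To be clear, this is not how Gemini works internally (I have no visibility into that). It's just the simplest version of cross-referencing I could write down. The `findings` list is made-up sample data (the values come from this post, but which source reported what is invented), and `cross_reference` and `min_sources` are hypothetical names:

```python
from collections import defaultdict

# Hypothetical findings: (source type, claim key, value as reported)
findings = [
    ("press release", "rtx_5090_transistors", "92B"),
    ("tech blog", "rtx_5090_transistors", "92B"),
    ("video transcript", "digits_price", "$3,000"),
    ("press release", "digits_price", "$3,000"),
    ("community thread", "digits_max_params", "200B"),
]


def cross_reference(findings, min_sources=2):
    """Group reported values by claim and flag claims that enough distinct sources agree on."""
    by_claim = defaultdict(lambda: defaultdict(set))
    for source, claim, value in findings:
        by_claim[claim][value].add(source)

    report = {}
    for claim, values in by_claim.items():
        # Pick the value backed by the most distinct sources.
        value, sources = max(values.items(), key=lambda kv: len(kv[1]))
        report[claim] = {
            "value": value,
            "sources": sorted(sources),
            "corroborated": len(sources) >= min_sources,
        }
    return report


for claim, result in cross_reference(findings).items():
    status = "corroborated" if result["corroborated"] else "needs another source"
    print(f"{claim}: {result['value']} ({status})")
```

The point of the sketch is the shape of the workflow: every claim keeps track of where it came from, and anything backed by only one source gets flagged rather than silently trusted.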

The Multimodal Magic: Gemini's Research Superpower 🔍

Here's what fascinates me most - Gemini's approach to research isn't just comprehensive; it's transformative in how it handles different types of content.

Remember when we had to manually:

Watch keynote videos 📹

Read through press releases 📄

Check tech blogs 💻

Cross-reference specifications 📊

Validate against expert commentary 🎯

Scan Reddit and tech communities for real-world feedback 💬

Now Gemini synthesizes all of these different media types into a coherent analysis. What really gets me excited is how it treats each source type as a unique piece of the puzzle:

Video transcripts -> Key quotes and context

Technical blogs -> Specifications and technical details

Press releases -> Official announcements

Expert commentary -> Industry perspectives

Community discussions -> Real-world validation and sentiment

This isn't just web searching - it's digital anthropology! The ability to understand and synthesize information across formats while validating against expert communities gives us a richer, more nuanced understanding of tech developments.

Also, to be fair to Claude, I wouldn't use anything else to help me craft the blog posts I write. Each tool really does bring something different to the table, and I will be using all four for now…