Cancer AI Alliance: When Federated Learning Finally Works

Four cancer centers did in 12 months what the industry couldn't do in 10 years. Here's what actually changed.

Four of the nation's leading cancer centers just did something remarkable. Dana-Farber, Fred Hutchinson, Memorial Sloan Kettering, and Johns Hopkins launched the Cancer AI Alliance (CAIA) this week. They built a platform that trains AI models on over a million patient records without sharing a single raw data file.

The kicker? They did it in 12 months.

Federated learning has been theoretically elegant for a decade. Industry conferences have been full of presentations promising it would solve data sharing problems. But implementations at scale? Rare. CAIA shipped in a year and already has eight research projects running.

So what changed? Why now, after years of hype?

The Promise That Kept Failing

The pitch for federated learning has always been compelling. Models travel to the data instead of data traveling to models. Each institution keeps patient records behind its firewall. The model trains locally and sends back only parameter updates, never the records themselves. Those updates aggregate into a stronger shared model.

No pooled database. No regulatory nightmares. No compliance violations.
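To make the pitch concrete, here is a minimal sketch of that loop in plain Python, using federated averaging on a toy linear model. It is not CAIA's implementation. The function names, the synthetic "sites", and the learning rate are all assumptions chosen for illustration.

```python
import random


def local_update(weights, records, lr=0.1, epochs=5):
    """Train on one site's private records; only the weights leave the site."""
    w, b = weights
    n = len(records)
    for _ in range(epochs):
        # Mean-squared-error gradients for the model y = w * x + b.
        grad_w = sum(((w * x + b) - y) * x for x, y in records) / n
        grad_b = sum((w * x + b) - y for x, y in records) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


def federated_average(site_weights, site_sizes):
    """Combine site models, weighting each by its number of records."""
    total = sum(site_sizes)
    w = sum(sw[0] * n for sw, n in zip(site_weights, site_sizes)) / total
    b = sum(sw[1] * n for sw, n in zip(site_weights, site_sizes)) / total
    return (w, b)


def make_site(n, seed):
    """Hypothetical site data: noisy samples of y = 2x + 1."""
    rng = random.Random(seed)
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, 2.0 * x + 1.0 + rng.gauss(0.0, 0.1)) for x in xs]


def train(sites, rounds=100):
    """The coordinator sees weights and record counts, never raw records."""
    global_weights = (0.0, 0.0)
    sizes = [len(s) for s in sites]
    for _ in range(rounds):
        updates = [local_update(global_weights, s) for s in sites]
        global_weights = federated_average(updates, sizes)
    return global_weights


if __name__ == "__main__":
    sites = [make_site(n, seed) for seed, n in enumerate([50, 200, 80, 120])]
    print(train(sites))
```

In this toy setup every site draws from the same distribution, so the averaged model settles near the true slope and intercept. Real sites rarely look alike, which is part of why the practice turned out harder than the pitch.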

It made perfect sense in PowerPoint. It kept failing in practice.

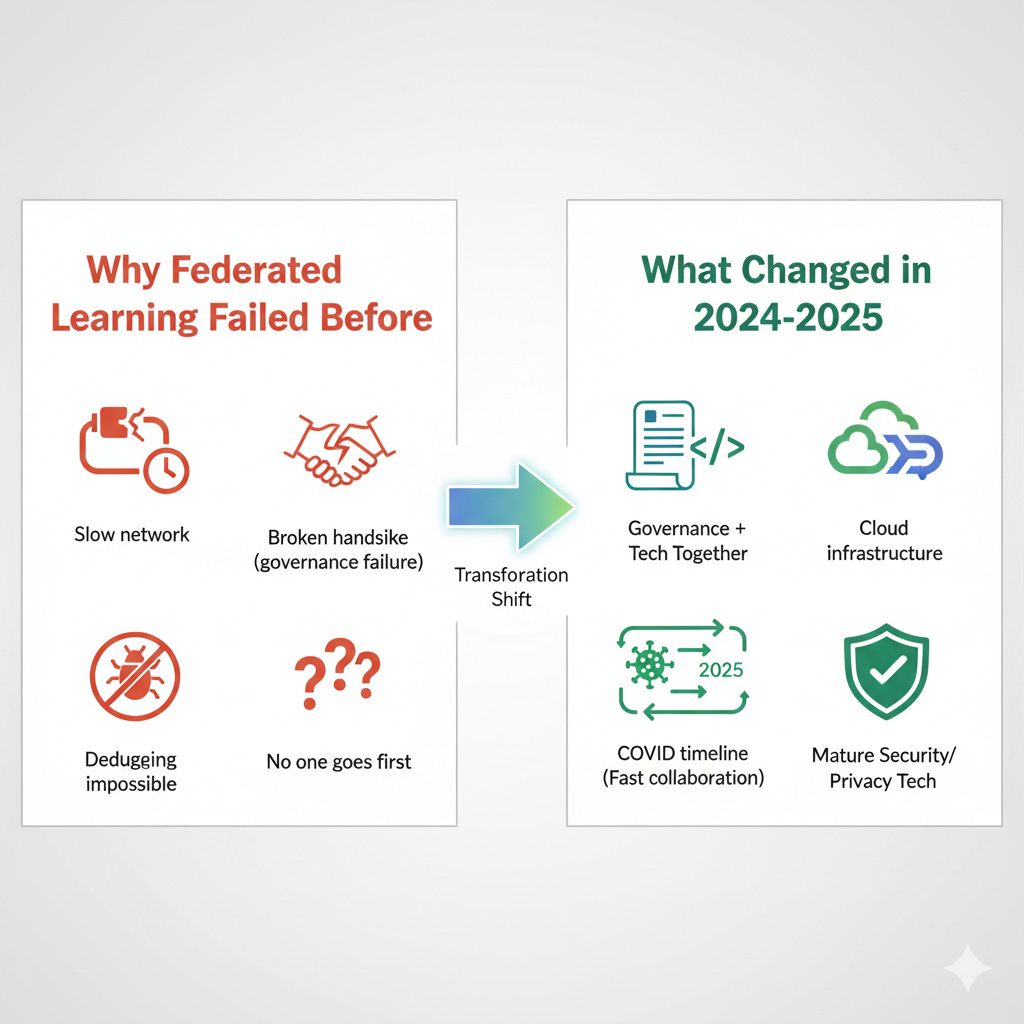

The problems were consistent:

Too slow. Early implementations couldn't handle the latency. Training cycles that took hours centrally took days or weeks in federated mode. Models would drift across sites. Convergence was unreliable.

Governance was vaporware. Everyone agreed on the theory but no one could agree on the legal framework. Who owns the trained model? How do you audit what it learned from where? What happens when one site's data quality is terrible? These questions killed projects in legal review.

No one wanted to go first. Classic chicken and egg problem. Building federated infrastructure is expensive. You need multiple partners to justify the cost. But partners won't commit until infrastructure exists.

Debugging was impossible. When a centralized model fails, you can inspect the training data. When a federated model fails, good luck figuring out which site's data caused the problem without violating privacy guarantees.

This wasn't just an incentive alignment problem. The infrastructure genuinely wasn't ready.

What Actually Changed

CAIA worked because several things converged at once.

The infrastructure matured

Secure enclaves and confidential computing moved from research papers to production systems. AWS Nitro Enclaves, Google Confidential Computing, and Azure Confidential VMs all ship today. Network speeds improved. Model compression techniques got better. Privacy-preserving ML techniques like differential privacy and secure multi-party computation became practical, not just theoretical.
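For a sense of what "practical" looks like, here is a rough sketch of the clip-and-add-noise step that differentially private training commonly applies to a model update before it leaves a site. This is an illustration, not a description of what CAIA runs. The function names and parameters are hypothetical, and a real deployment also needs privacy accounting, which this sketch omits.

```python
import math
import random


def clip_update(update, max_norm):
    """Scale the update down so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    if norm <= max_norm:
        return list(update)
    scale = max_norm / norm
    return [v * scale for v in update]


def privatize_update(update, max_norm, noise_multiplier, rng):
    """Clip, then add Gaussian noise scaled to the clipping bound.

    Clipping bounds how much any one update can move the shared model;
    the noise masks what remains. The resulting privacy guarantee depends
    on noise_multiplier and on accounting that is not shown here.
    """
    clipped = clip_update(update, max_norm)
    sigma = noise_multiplier * max_norm
    return [v + rng.gauss(0.0, sigma) for v in clipped]


if __name__ == "__main__":
    rng = random.Random(42)
    raw = [3.0, 4.0]  # hypothetical two-parameter update with L2 norm 5
    print(privatize_update(raw, max_norm=1.0, noise_multiplier=0.5, rng=rng))
```

The trade-off is direct: more noise means stronger privacy and a less accurate shared model.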

Governance got built alongside the tech

Most projects try to build the technology first, then figure out governance. CAIA inverted this. They created unified technical, legal, and governance structures simultaneously. That's why it took a year instead of six months. The governance framework was as much of the deliverable as the code.

COVID created institutional muscle memory

Academic medical centers proved they could collaborate fast when it mattered. Vaccine trials, treatment protocols, and data sharing for public health all happened at unprecedented speed. That experience translated directly to CAIA.

As Brian Bot, director of CAIA's coordinating center, put it: "It cannot be overstated how momentous it is that we came together to launch this platform in just one year."

Big tech needed a flagship deployment

AWS, Google, Microsoft, and NVIDIA didn't contribute infrastructure out of charity. They needed a high-profile, ethically unquestionable use case to prove their federated learning products work at scale. Cancer research gave them the perfect sandbox. PR upside, technical validation, and minimal downside risk.

Regulatory pressure forced innovation

GDPR in Europe, data localization laws globally, and HIPAA in the US all made centralized data pooling harder. That constraint drove real innovation in privacy-preserving techniques. Necessity, mother of invention, etc.

According to Jeff Leek, Chief Data Officer at Fred Hutch and scientific director of CAIA, the platform could accelerate breakthrough discoveries by up to tenfold. That's the claim. Eight projects already running means we'll find out soon if it's real.

The Governance Breakthrough

Here's what most people miss: CAIA succeeded because they solved the governance problem, not because they wrote better ML code.

The Newsweek article nails this:

"For business leaders watching AI's spread across industries, CAIA's launch is less about oncology than it is about precedent. The initiative demonstrates that federated approaches can move from theory to practice when institutions align incentives. It also highlights how governance frameworks can be as innovative as the algorithms themselves."

Federated approaches often get pitched as solutions to data governance challenges across industries. The technical barriers are real but solvable. The organizational barriers? Those kill projects.

The typical objections heard in boardrooms:

"We can't share data across business units due to compliance"

"Our datasets are incompatible"

"Who owns the model once it's trained?"

"What if another division benefits more than we do?"

None of these are technical questions. They're governance questions.

CAIA had to answer:

How are contributions weighted?

What happens if one center's data quality is poor?

How do you audit what the model learned without exposing private data?

What's the incentive for high-quality data contribution?

Who can access the trained models?

How do intellectual property rights work for discoveries made with the shared model?
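A few of these questions can be written down as explicit rules rather than left to negotiation. The sketch below is purely hypothetical and does not reflect CAIA's actual framework. The class names, thresholds, and `quality_score` field are invented to show how weighting, a data-quality gate, and an audit trail could live in one place.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class SiteUpdate:
    site: str
    weights: list
    n_records: int
    quality_score: float  # hypothetical 0..1 result of a local data-quality check


@dataclass
class AggregationPolicy:
    min_records: int = 100
    min_quality: float = 0.8
    audit_log: list = field(default_factory=list)

    def admit(self, update):
        """Apply the agreed thresholds and record the decision."""
        ok = (update.n_records >= self.min_records
              and update.quality_score >= self.min_quality)
        # Log a hash of the update, not the update or any patient data.
        digest = hashlib.sha256(json.dumps(update.weights).encode()).hexdigest()
        self.audit_log.append({
            "site": update.site,
            "n_records": update.n_records,
            "admitted": ok,
            "update_sha256": digest,
        })
        return ok

    def aggregate(self, updates):
        """Average admitted updates, weighted by record count."""
        admitted = [u for u in updates if self.admit(u)]
        if not admitted:
            raise ValueError("no site update met the policy")
        total = sum(u.n_records for u in admitted)
        dim = len(admitted[0].weights)
        return [sum(u.weights[i] * u.n_records for u in admitted) / total
                for i in range(dim)]
```

The point of the sketch is that the thresholds are governance decisions, not engineering ones. Someone has to agree on `min_quality` before any code runs.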

They figured this out before deploying. That's the innovation.

What Companies Get Wrong

Federated learning often gets pitched as a technical solution to a political problem. Teams show the architecture diagram. They explain the privacy guarantees. They demonstrate the proof of concept.

Then it dies in legal review.

The real issue: compliance teams often see federated learning as more risk, not less. They understand centralized data governance. They have policies for that. Federated learning is new. New means risky. Risky means no.

Business units fear losing control. If the model learns from everyone's data but lives centrally, who really benefits? If marketing and sales train a federated model together, does marketing give up competitive intelligence about customer behavior? These fears are rational.

Without the cancer mission, what's the incentive to contribute your best data to a shared model?

That's where CAIA has an advantage most organizations don't. Nobody at Dana-Farber cares about competitive advantage over Memorial Sloan Kettering when the goal is curing kids with leukemia. The mission aligns incentives in a way quarterly earnings reports never will.

Can This Work Beyond Cancer?

That's the question worth exploring.

Other disease areas

Cardiovascular disease, rare diseases, and mental health all have similar data fragmentation problems. But do they have the same urgency and mission clarity that cancer does? Alzheimer's might. Diabetes might. But the further you get from life-threatening conditions, the harder it gets to align institutional incentives.

Inside companies

The skepticism is warranted. Most companies don't have a shared mission that overrides departmental politics. A manufacturing optimization use case doesn't carry the moral weight of curing cancer. That matters for getting legal, compliance, and business leaders to actually take risks on new governance frameworks.

But maybe organizations don't need the cancer mission if they get the governance right. If CAIA can publish their legal and technical frameworks openly, other entities could adapt them. The governance template might be more valuable than the ML pipeline.

Other industries

Finance has similar problems with data silos and regulatory constraints. So does healthcare more broadly. The same goes for government services, logistics, and any domain where data can't be centralized due to regulation or competition.

The pattern from this week suggests federated learning is moving from theory to infrastructure across multiple domains. CAIA isn't alone:

Bristol Myers Squibb, Takeda, Astex, AbbVie, and J&J launched a federated consortium to train OpenFold3 on protein-small molecule data

Illumina launched BioInsight with federated analysis capabilities for multiomics data

Eli Lilly opened TuneLab to biotech companies using federated approaches

This all happened in the span of a week. That's not coincidence. The infrastructure finally works.

What I'm Watching

Does this scale? CAIA plans to expand beyond the founding four institutions. What happens at 10 centers? 20? Do the governance frameworks hold or do they need constant renegotiation?

Model performance. Federated learning can underperform centralized training, with 5-10% a typical ballpark and the gap depending on how much data differs across sites. Is 90-95% of central performance acceptable if you get access to 10x more data? The math might work out, but will researchers accept it?

Speed in practice. The claim is discoveries up to ten times faster. Let's see if the eight running projects actually deliver breakthroughs in months instead of years. That's the real test.

Replication in other therapeutic areas. Will cardiovascular researchers, rare disease groups, or mental health networks build similar alliances? Or is cancer uniquely motivating?

Corporate adoption. Can companies apply this governance model internally? Or does the lack of a shared moral mission make it impossible to get past departmental politics?

Why This Matters

CAIA matters because it's proof that federated learning can work when the pieces align. Infrastructure, governance, timing, and incentives all came together.

The technology was available for years. Cloud platforms, privacy-preserving ML, and secure enclaves have been production-ready for a while. What changed was the organizational will to build governance frameworks alongside the algorithms.

As Brenda Young from Slalom said: "When institutions and technology partners move together, breakthroughs stop being the exception and become the rule."

Maybe companies can learn from this. Maybe the governance frameworks are portable even if the mission isn't. Maybe the industry has been focused on the wrong problem and compliance teams just need better templates to evaluate risk.

Or maybe cancer is special. Maybe the mission really does matter that much. Maybe federated learning inside companies needs a different pitch entirely, one that addresses departmental incentives directly instead of assuming everyone wants to collaborate for the greater good.

The jury is still out. But CAIA just gave us a working example to study. That's more than we had last week.